How to Use PHP with AI for Automatic Summarization: Quickly Generating Summaries of Long Articles

Jul 25, 2025, 08:36 PM

1. The core of combining PHP with AI for automatic summarization is calling an AI service API, such as OpenAI's or a cloud platform's NLP service.
2. The specific steps are: obtain an API key, prepare plain text, send a POST request with cURL, parse the JSON response, and display the summary.
3. Summaries help filter information efficiently, improve readability, support content management, and suit fragmented reading habits.
4. When choosing a model, consider the summary type (extractive or abstractive), cost, language support, ease of use and documentation, and data security.
5. Common challenges include rate limiting, network timeouts, text length limits, runaway costs, and fluctuating quality. Strategies include retry mechanisms, asynchronous queues, chunking, caching results, and refining prompts.

Put simply, combining PHP with AI for automatic summarization means letting your PHP application "understand" long text and extract its core content. It sounds sophisticated, but the principle is simple: PHP acts as the bridge between the front end and the AI service. It sends the text you want summarized to an AI service (usually through an HTTP API), receives the condensed result, and displays it to the user. This can greatly speed up how readers take in information, especially when there is a lot of text to process.

Solution

The most direct and efficient way to give PHP automatic summarization is to call an off-the-shelf AI service API. Many AI models offer text summarization, such as OpenAI's GPT models or the language AI services offered by cloud providers such as Google Cloud and AWS. Your PHP code only needs to act as the messenger: it passes the text in and passes the result back.

The typical workflow is as follows:

1. Select an AI service provider and obtain an API key. This is the foundation: you need a valid key to call the AI interface. I personally use OpenAI most often because its models work well and its documentation is clear.

2. Prepare the text to be summarized. Make sure it is clean, with no leftover HTML tags or stray special characters, because the model should receive plain text.

3. Build the API request. PHP sends an HTTP POST request to the AI service's API endpoint. The request usually carries your API key (in a request header or the request body) and the text to be summarized, and may also specify parameters such as the summary's length and style.

Below is a simplified PHP cURL example that sends a request to OpenAI's Chat Completions endpoint. It is only a sketch; real use may need more thorough error handling and parameter configuration:

```php
<?php
// Assumes you already have an OpenAI API key
$apiKey = 'YOUR_OPENAI_API_KEY';
$textToSummarize = "Put your long text here, such as an article or a report. The AI will generate a summary based on this text.";

$data = [
    // Any chat model works here; completion-only models such as text-davinci-003 do not work with this endpoint
    'model' => 'gpt-3.5-turbo',
    'messages' => [
        [
            'role' => 'system',
            'content' => 'You are a professional text summarization tool. Condense the text provided by the user into a concise and accurate summary.'
        ],
        [
            'role' => 'user',
            'content' => "Please generate a summary of about 200 words for the following text:\n" . $textToSummarize
        ]
    ],
    'max_tokens' => 300,  // Upper bound on the length of the summary
    'temperature' => 0.7, // Controls randomness; lower values (down to 0) are more deterministic
];

$ch = curl_init('https://api.openai.com/v1/chat/completions');
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
curl_setopt($ch, CURLOPT_POST, true);
curl_setopt($ch, CURLOPT_POSTFIELDS, json_encode($data));
curl_setopt($ch, CURLOPT_HTTPHEADER, [
    'Content-Type: application/json',
    'Authorization: Bearer ' . $apiKey, // note the space after "Bearer"
]);
curl_setopt($ch, CURLOPT_CONNECTTIMEOUT, 10); // seconds allowed to establish the connection
curl_setopt($ch, CURLOPT_TIMEOUT, 60);        // seconds allowed for the whole request

$response = curl_exec($ch);
$httpCode = curl_getinfo($ch, CURLINFO_HTTP_CODE);
$curlError = curl_error($ch);
curl_close($ch);

if ($response === false) {
    // Network-level failure (DNS, TLS, timeout): there is no HTTP response at all
    echo "Request failed: " . $curlError . "\n";
} elseif ($httpCode === 200) {
    $responseData = json_decode($response, true);
    if (isset($responseData['choices'][0]['message']['content'])) {
        $summary = $responseData['choices'][0]['message']['content'];
        echo "Generated summary:\n" . $summary . "\n";
    } else {
        echo "The API response format is unexpected or no summary was found.\n";
        // Debug: var_dump($responseData);
    }
} else {
    echo "API request failed, HTTP status code: " . $httpCode . "\n";
    echo "Error message: " . $response . "\n";
}
?>
```

4. Parse the API response. The AI service usually returns JSON. Decode it with PHP's json_decode() function and extract the summary content from it.

5. Display or store the summary. Once you have the summary, you can show it to the user or save it to a database for later use.
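Step 2 above (cleaning the input) can be sketched in plain PHP. This is a minimal helper that assumes your articles arrive as HTML; the function name prepareTextForSummary is hypothetical, and the rules it applies (drop `<script>`/`<style>` blocks, turn paragraph and line-break tags into newlines, strip the remaining tags, decode entities, collapse whitespace) are one reasonable choice rather than a requirement of any AI API.

```php
<?php
/**
 * Hypothetical helper: convert HTML (or messy text) into clean plain text
 * before sending it to an AI API. Drops <script>/<style> contents, keeps
 * paragraph breaks as newlines, strips tags, decodes entities, and
 * collapses whitespace.
 */
function prepareTextForSummary(string $html): string
{
    // Remove script and style blocks entirely; strip_tags() would keep their contents.
    $text = preg_replace('#<(script|style)\b[^>]*>.*?</\1>#is', '', $html);
    // Turn <br> and closing block-level tags into newlines so paragraphs survive.
    $text = preg_replace('#<br\s*/?>|</(p|div|h[1-6]|li)>#i', "\n", $text);
    // Drop all remaining tags.
    $text = strip_tags($text);
    // Decode entities such as &amp; and &nbsp;.
    $text = html_entity_decode($text, ENT_QUOTES | ENT_HTML5, 'UTF-8');
    // Replace non-breaking spaces (U+00A0) with ordinary spaces.
    $text = str_replace("\u{00A0}", ' ', $text);
    // Collapse runs of spaces/tabs, trim spaces around newlines, cap blank lines at one.
    $text = preg_replace('/[ \t]+/', ' ', $text);
    $text = preg_replace('/ *\n */', "\n", $text);
    $text = preg_replace('/\n{3,}/', "\n\n", $text);
    return trim($text);
}

echo prepareTextForSummary('<h1>Title</h1><p>First&nbsp;paragraph &amp; more.</p><script>alert(1)</script><p>Second   one.</p>'), "\n";
// Prints three lines: "Title", "First paragraph & more.", "Second one."
```

Removing `<script>` and `<style>` before calling strip_tags() matters because strip_tags() removes only the tags, so JavaScript or CSS would otherwise end up in the prompt and waste tokens.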

Why do we need automatic summarization? What pain points does it solve?

In an era of information overload, I often feel that quickly grasping the essentials is practically a survival skill. We face a flood of articles, reports, and news every day; reading each one carefully would leave no time for anything else. Automatic summarization addresses exactly this pain point.

It allows people to:

- Filter information efficiently: Imagine you have a pile of unread emails or press releases. With summaries, you can quickly decide which ones deserve a close read and which can be skipped. It is like adding a smart filter to your information stream.

- Improve content readability: Long-form discussions often put readers off. A good summary conveys the essence of an article so readers can grasp the overview in a short time, and it may even spark their interest in reading the original.

- Support content creation and management: For example, you can use summaries to generate article blurbs and social media copy, or to build indexes for internal documents. For content platforms, automatically generated summaries can greatly reduce editing work.

- Adapt to fragmented reading habits: People increasingly read in short bursts, and short, concise content is more popular. Summaries fit this trend.

What should you consider when choosing an AI model and API?

You cannot just pick one at random; the choice has to be based on your specific needs. There is quite a lot to consider:

- Summary type: There are two main types of AI summarization:

- Extractive summarization: This approach works like a pair of scissors. It pulls the most important sentences or phrases directly from the original text and stitches them into a summary. The advantage is fidelity to the original wording, but the result may read less smoothly.

- Abstractive summarization: This approach works more like a human reader. It first understands the original content and then rewrites it in new language. Its summaries are smoother and more natural and may even contain words that do not appear in the original; the drawback is that it can "hallucinate" (generate content that is not true) or misread the source. When combining PHP with AI, we usually favor abstractive (generative) models because they produce more natural summaries. OpenAI's GPT series is a typical generative model.

- Cost and performance: API services price differently: some charge by the amount of text processed (for large language model APIs, usually per token), others per request. The model's response speed and the quality of its summaries also matter, so you need to balance your budget against your quality requirements. Large models such as OpenAI's usually give good results but can be relatively expensive.

- Language support: If your application processes multilingual text, make sure the model you choose supports the languages you need.

- Ease of use and documentation: Clear API documentation and the availability of a PHP SDK both affect development efficiency (the example above uses raw cURL, but an SDK can be more convenient).

- Data privacy and security: Especially when processing sensitive information, you need to understand the AI provider's data-handling policies to ensure compliance with regulatory requirements.

- Model customization: Some AI services let you fine-tune a model to suit the summarization needs of a particular domain or style, but this is usually more complex and costly.
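To make the extractive approach described above concrete, here is a toy extractive summarizer that runs entirely in PHP without any AI service. It is an illustration under simplifying assumptions, not how any commercial API works: sentences are split naively on `.`, `!` and `?`, a tiny hard-coded stop-word list is removed, and each sentence is scored by the average frequency of its words across the whole text. The function name extractiveSummary is hypothetical.

```php
<?php
/**
 * Hypothetical toy extractive summarizer: scores each sentence by the mean
 * frequency (across the whole text) of its non-stop-word words, keeps the
 * top $maxSentences sentences, and returns them in their original order.
 */
function extractiveSummary(string $text, int $maxSentences = 2): string
{
    // Naive sentence split: break after ., ! or ? followed by whitespace.
    $sentences = preg_split('/(?<=[.!?])\s+/', trim($text), -1, PREG_SPLIT_NO_EMPTY);
    if (count($sentences) <= $maxSentences) {
        return implode(' ', $sentences);
    }

    // Lowercase, split on anything that is not a letter or digit, drop a few stop words.
    $stopWords = ['a', 'an', 'the', 'to', 'is', 'are', 'of', 'and', 'in'];
    $tokenize = function (string $s) use ($stopWords): array {
        $words = preg_split('/[^\p{L}\p{N}]+/u', strtolower($s), -1, PREG_SPLIT_NO_EMPTY);
        return array_values(array_diff($words, $stopWords));
    };
    $freq = array_count_values($tokenize($text));

    // Score = mean frequency of the sentence's words across the whole text.
    $scores = [];
    foreach ($sentences as $i => $sentence) {
        $words = $tokenize($sentence);
        $scores[$i] = $words ? array_sum(array_map(fn ($w) => $freq[$w], $words)) / count($words) : 0;
    }

    // Take the highest-scoring sentences, then restore document order.
    arsort($scores);
    $keep = array_keys(array_slice($scores, 0, $maxSentences, true));
    sort($keep);
    return implode(' ', array_map(fn ($i) => $sentences[$i], $keep));
}

$article = 'PHP sends text to an AI API. The AI API returns a summary. Cats are cute. The summary is shown to the user.';
echo extractiveSummary($article, 2), "\n";
// Prints: PHP sends text to an AI API. The AI API returns a summary.
```

Because it only copies existing sentences, this kind of summary cannot invent facts, which is the fidelity advantage of extractive summarization; the price is the choppier flow mentioned above.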

What challenges might you face when integrating AI summarization into PHP, and how can you handle them?

When you actually integrate AI summarization into a PHP application, you will find it takes more than a few lines of code; unexpected problems always come up. I remember one time our system went down outright because we had not accounted for the API's rate limits. It was a real mess.

Common challenges and my coping strategies:

- API rate limiting: Most AI services limit how often and how many concurrent requests you can send.

- Coping strategy: Implement a retry mechanism such as exponential backoff: if a request fails, wait briefly and try again, doubling the wait after each failure. Also consider using a queue (such as RabbitMQ or a Redis list) to process summary requests asynchronously, so the main PHP process is not blocked and the API is not overloaded.

- Network latency and timeouts: Calling an external API always carries the risk of an unstable network, which can cause requests to time out.

- Coping strategy: Set a reasonable cURL timeout. Asynchronous processing, as mentioned above, also helps: even if the API responds slowly, the user interface stays responsive.

- Error handling and logging: The API can return many kinds of errors, from authentication failures to input text that is too long.

- Coping strategy: Parse the error codes and messages returned by the API carefully, and either show a user-friendly message or handle the error internally depending on its type. Always keep logs to make troubleshooting easier.

- Input text length limit: Most AI models limit the amount of text in a single request (for example, OpenAI's token limit).

- Coping strategy: Split very long text into chunks, by paragraph, by a fixed word count, or by token count. You can then summarize each chunk individually or, more elaborately, summarize each chunk and then summarize those "mini summaries" again, repeating until the target length is reached. This requires some design work.

- Cost management: If you are not careful, AI API costs can exceed expectations.

- Coping strategy: Monitor API usage and set budget alerts. For frequently requested content, consider caching summaries: if a long text has already been summarized, return the cached result the next time it is requested.

- Inconsistent summary quality: The quality of AI-generated summaries sometimes fluctuates or falls short of your expectations.

- Coping strategy: Fine-tune the output by adjusting API request parameters (such as temperature and top_p). More importantly, give the AI clear instructions in the prompt, for example: "Please generate a concise, objective summary of the core points in no more than 100 words." For critical scenarios, manual review or a user feedback mechanism may be needed to keep improving results.

- Security: Be cautious when sending sensitive data to third-party AI services.

- Coping strategy: Keep the API key from leaking and never hardcode it in public code; use environment variables or a key management service instead. Where possible, desensitize (mask) the text before sending it.
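The exponential backoff strategy under API rate limiting can be sketched as a small wrapper. The function name retryWithBackoff and the `['status' => ..., 'body' => ...]` return shape are assumptions made for this illustration; in practice `$request` would wrap a cURL call like the one in the Solution section, with CURLOPT_CONNECTTIMEOUT and CURLOPT_TIMEOUT set so a hung connection fails fast and can be retried. The sleep function is injectable so the logic can be tested without real waiting.

```php
<?php
/**
 * Hypothetical retry helper. $request performs ONE attempt and returns
 * ['status' => int, 'body' => string]; status 0 stands for a network/cURL
 * failure. Only 0, 429 (rate limited) and 5xx are retried; other statuses
 * (e.g. 400 or 401) are returned at once because retrying will not help.
 * Waits base, 2x base, 4x base, ... milliseconds plus a small random jitter.
 */
function retryWithBackoff(callable $request, int $maxAttempts = 5, int $baseDelayMs = 500, ?callable $sleepMs = null): array
{
    // Default sleeper; tests can inject their own to avoid real waiting.
    $sleepMs ??= function (int $ms): void { usleep($ms * 1000); };

    for ($attempt = 1; ; $attempt++) {
        $result = $request();
        $status = $result['status'];
        $retryable = $status === 0 || $status === 429 || $status >= 500;
        if (!$retryable || $attempt >= $maxAttempts) {
            return $result;
        }
        $delay = $baseDelayMs * (2 ** ($attempt - 1));
        $sleepMs($delay + random_int(0, intdiv($baseDelayMs, 2)));
    }
}

// Usage with a simulated endpoint that is rate limited twice, then succeeds.
$calls = 0;
$fakeRequest = function () use (&$calls): array {
    $calls++;
    return $calls < 3
        ? ['status' => 429, 'body' => 'rate limited']
        : ['status' => 200, 'body' => '{"ok":true}'];
};
$result = retryWithBackoff($fakeRequest, 5, 100);
echo $result['status'], ' after ', $calls, " attempts\n"; // 200 after 3 attempts
```

The random jitter keeps many workers that were throttled at the same moment from retrying in lockstep. For heavy traffic, the same wrapper can run inside a queue worker rather than inside the web request.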
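The chunking strategy under the input text length limit, including the "summarize the summaries" variant, might look like the following sketch. It assumes character counts as a rough proxy for tokens (real limits are measured in tokens, so leave a safety margin) and needs the mbstring extension so multibyte text is never cut mid-character. chunkText and summarizeLongText are hypothetical names, and `$summarize` stands in for whatever function calls your AI API.

```php
<?php
/**
 * Hypothetical helper: split text into chunks of at most $maxChars
 * characters, preferring paragraph boundaries. Requires mbstring.
 */
function chunkText(string $text, int $maxChars): array
{
    $chunks = [];
    $current = '';
    foreach (preg_split('/\n\s*\n/', trim($text)) as $paragraph) {
        // An oversized paragraph is hard-split into pieces of $maxChars characters.
        foreach (mb_str_split($paragraph, $maxChars) as $piece) {
            $candidate = $current === '' ? $piece : $current . "\n\n" . $piece;
            if (mb_strlen($candidate) <= $maxChars) {
                $current = $candidate; // still fits: keep growing this chunk
            } else {
                $chunks[] = $current;  // full: close it and start a new one
                $current = $piece;
            }
        }
    }
    if ($current !== '') {
        $chunks[] = $current;
    }
    return $chunks;
}

/**
 * Hypothetical "summary of summaries" driver: summarize every chunk, join
 * the partial summaries, and repeat until the text fits in one chunk, then
 * summarize once more. $summarize is any callable string => string.
 */
function summarizeLongText(string $text, int $maxChars, callable $summarize): string
{
    while (mb_strlen($text) > $maxChars) {
        $partials = array_map($summarize, chunkText($text, $maxChars));
        $next = implode("\n\n", $partials);
        if (mb_strlen($next) >= mb_strlen($text)) {
            throw new RuntimeException('Summaries are not getting shorter; aborting.');
        }
        $text = $next;
    }
    return $summarize($text);
}
```

The guard that throws when the combined summaries stop shrinking prevents an endless loop if the model ever returns summaries longer than its input.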
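The result caching suggested under cost management can be as simple as keying each summary by a hash of the input text plus the request settings, so changing the model or prompt never returns a stale summary. This sketch stores files in a directory you choose; the function name cachedSummary and the settings-key format are assumptions, and in production Redis or a database table would be the more common store.

```php
<?php
/**
 * Hypothetical cache wrapper: returns a stored summary when the same text was
 * already summarized with the same settings, otherwise calls $summarize and
 * saves the result as a file named after a SHA-256 hash of settings + text.
 */
function cachedSummary(string $text, string $settingsKey, callable $summarize, string $cacheDir): string
{
    $key = hash('sha256', $settingsKey . "\0" . $text);
    $path = $cacheDir . DIRECTORY_SEPARATOR . $key . '.txt';

    if (is_file($path) && ($cached = file_get_contents($path)) !== false) {
        return $cached; // cache hit: no API call, no cost
    }

    $summary = $summarize($text); // cache miss: call the (hypothetical) API wrapper
    if (!is_dir($cacheDir)) {
        mkdir($cacheDir, 0775, true);
    }
    file_put_contents($path, $summary, LOCK_EX);
    return $summary;
}

// Usage: the second identical request is served from the cache.
$cacheDir = sys_get_temp_dir() . DIRECTORY_SEPARATOR . 'summary-cache-' . uniqid();
$apiCalls = 0;
$fakeSummarize = function (string $t) use (&$apiCalls): string {
    $apiCalls++;
    return 'Summary of a ' . strlen($t) . '-byte text.';
};
$first = cachedSummary('A long article...', 'gpt-3.5-turbo|~200 words', $fakeSummarize, $cacheDir);
$second = cachedSummary('A long article...', 'gpt-3.5-turbo|~200 words', $fakeSummarize, $cacheDir);
echo $apiCalls, "\n"; // 1
```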

Dealing with these challenges takes some patience and practice, but in the end you will find that combining PHP and AI can give your application powerful new capabilities.

The above is the detailed content of "How to Use PHP with AI for Automatic Summarization". For more information, please see other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Ethena treasury strategy: the rise of the third empire of stablecoin

Jul 30, 2025 pm 08:12 PM

Ethena treasury strategy: the rise of the third empire of stablecoin

Jul 30, 2025 pm 08:12 PM

The real use of battle royale in the dual currency system has not yet happened. Conclusion In August 2023, the MakerDAO ecological lending protocol Spark gave an annualized return of $DAI8%. Then Sun Chi entered in batches, investing a total of 230,000 $stETH, accounting for more than 15% of Spark's deposits, forcing MakerDAO to make an emergency proposal to lower the interest rate to 5%. MakerDAO's original intention was to "subsidize" the usage rate of $DAI, almost becoming Justin Sun's Solo Yield. July 2025, Ethe

Why is Bitcoin with a ceiling? Why is the maximum number of Bitcoins 21 million

Jul 30, 2025 pm 10:30 PM

Why is Bitcoin with a ceiling? Why is the maximum number of Bitcoins 21 million

Jul 30, 2025 pm 10:30 PM

The total amount of Bitcoin is 21 million, which is an unchangeable rule determined by algorithm design. 1. Through the proof of work mechanism and the issuance rule of half of every 210,000 blocks, the issuance of new coins decreased exponentially, and the additional issuance was finally stopped around 2140. 2. The total amount of 21 million is derived from summing the equal-scale sequence. The initial reward is 50 bitcoins. After each halving, the sum of the sum converges to 21 million. It is solidified by the code and cannot be tampered with. 3. Since its birth in 2009, all four halving events have significantly driven prices, verified the effectiveness of the scarcity mechanism and formed a global consensus. 4. Fixed total gives Bitcoin anti-inflation and digital yellow metallicity, with its market value exceeding US$2.1 trillion in 2025, becoming the fifth largest capital in the world

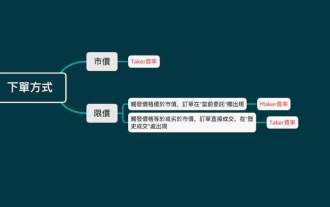

What are Maker and Taker? How to calculate the handling fee? A list of handling fees on popular exchanges

Jul 30, 2025 pm 09:33 PM

What are Maker and Taker? How to calculate the handling fee? A list of handling fees on popular exchanges

Jul 30, 2025 pm 09:33 PM

What are directory Maker and pending orders? How to calculate MakerFee? MakerFee calculation formula. Is it necessary to use limit orders? Taker and eat orders? How to calculate TakerFee How to determine whether you are Maker or Taker lazy package – Maker/taker fee spot maker/taker fee lazy package contract maker/taker fee lazy package How to reduce Maker/taker transaction fees for various virtual currency exchanges

What is Binance Treehouse (TREE Coin)? Overview of the upcoming Treehouse project, analysis of token economy and future development

Jul 30, 2025 pm 10:03 PM

What is Binance Treehouse (TREE Coin)? Overview of the upcoming Treehouse project, analysis of token economy and future development

Jul 30, 2025 pm 10:03 PM

What is Treehouse(TREE)? How does Treehouse (TREE) work? Treehouse Products tETHDOR - Decentralized Quotation Rate GoNuts Points System Treehouse Highlights TREE Tokens and Token Economics Overview of the Third Quarter of 2025 Roadmap Development Team, Investors and Partners Treehouse Founding Team Investment Fund Partner Summary As DeFi continues to expand, the demand for fixed income products is growing, and its role is similar to the role of bonds in traditional financial markets. However, building on blockchain

What is Bitcoin Taproot Upgrade? What are the benefits of Taproot?

Jul 30, 2025 pm 08:27 PM

What is Bitcoin Taproot Upgrade? What are the benefits of Taproot?

Jul 30, 2025 pm 08:27 PM

Directory What is Bitcoin? How does Bitcoin work? Why is Bitcoin not scalable? What is BIP (Bitcoin Improvement Proposal)? What is Bitcoin Taproot Update? Pay to Taproot (P2TR): Benefits of Taproot: Space-saving privacy advantages Security upgrade conclusion: ?Bitcoin is the first digital currency that can send and receive funds without using a third party. Since Bitcoin is software, like any other software, it needs updates and bug fixes. Bitcoin Taproot is such an update that introduces new features to Bitcoin. Cryptocurrency is a hot topic now. People have been talking about it for years, but now with prices rising rapidly, suddenly everyone decides to join and invest in them. Message

Solana and the founders of Base Coin start a debate: the content on Zora has 'basic value'

Jul 30, 2025 pm 09:24 PM

Solana and the founders of Base Coin start a debate: the content on Zora has 'basic value'

Jul 30, 2025 pm 09:24 PM

A verbal battle about the value of "creator tokens" swept across the crypto social circle. Base and Solana's two major public chain helmsmans had a rare head-on confrontation, and a fierce debate around ZORA and Pump.fun instantly ignited the discussion craze on CryptoTwitter. Where did this gunpowder-filled confrontation come from? Let's find out. Controversy broke out: The fuse of Sterling Crispin's attack on Zora was DelComplex researcher Sterling Crispin publicly bombarded Zora on social platforms. Zora is a social protocol on the Base chain, focusing on tokenizing user homepage and content

Why do you say you choose altcoins in a bull market and buy BTC in a bear market

Jul 30, 2025 pm 10:27 PM

Why do you say you choose altcoins in a bull market and buy BTC in a bear market

Jul 30, 2025 pm 10:27 PM

The strategy of choosing altcoins in a bull market, and buying BTC in a bear market is established because it is based on the cyclical laws of market sentiment and asset attributes: 1. In the bull market, altcoins are prone to high returns due to their small market value, narrative-driven and liquidity premium; 2. In the bear market, Bitcoin has become the first choice for risk aversion due to scarcity, liquidity and institutional consensus; 3. Historical data shows that the increase in the bull market altcoins in 2017 far exceeded that of Bitcoin, and the decline in the bear market in 2018 was also greater. In 2024, funds in the volatile market will be further concentrated in BTC; 4. Risk control needs to be vigilant about manipulating traps, buying at the bottom and position management. It is recommended that the position of altcoins in a bull market shall not exceed 30%, and the position holdings of BTC in a bear market can be increased to 70%; 5. In the future, due to institutionalization, technological innovation and macroeconomic environment, the strategy needs to be dynamically adjusted to adapt to market evolution.

What is Zircuit (ZRC currency)? How to operate? ZRC project overview, token economy and prospect analysis

Jul 30, 2025 pm 09:15 PM

What is Zircuit (ZRC currency)? How to operate? ZRC project overview, token economy and prospect analysis

Jul 30, 2025 pm 09:15 PM

Directory What is Zircuit How to operate Zircuit Main features of Zircuit Hybrid architecture AI security EVM compatibility security Native bridge Zircuit points Zircuit staking What is Zircuit Token (ZRC) Zircuit (ZRC) Coin Price Prediction How to buy ZRC Coin? Conclusion In recent years, the niche market of the Layer2 blockchain platform that provides services to the Ethereum (ETH) Layer1 network has flourished, mainly due to network congestion, high handling fees and poor scalability. Many of these platforms use up-volume technology, multiple transaction batches processed off-chain