Open-Sora comprehensive open source upgrade: supports 16s video generation and 720p resolution

Apr 25, 2024, 02:55 PM

Open-Sora has been quietly updated in the open source community. It now supports generating videos up to 16 seconds long at resolutions up to 720p, and a single model handles text-to-image, text-to-video, image-to-video, video-to-video and infinitely long video generation at any aspect ratio. Let's try it out.

Generate a horizontal Christmas snow scene and post it to Bilibili

Generate a vertical video and post it to Douyin

It can also generate 16-second videos, so now anyone can try their hand at screenwriting

How to try it: getting started

GitHub: https://github.com/hpcaitech/Open-Sora

What's even better is that Open-Sora remains fully open source, including the latest model architecture, the latest model weights, the training process for multiple durations, resolutions, aspect ratios and frame rates, the complete data collection and preprocessing pipeline, all training details, demo examples and a detailed getting-started tutorial.

A detailed look at the Open-Sora technical report

Overview of the latest features

The author team officially released the Open-Sora technical report [1] on GitHub. As we understand it, this update mainly includes the following key features:

- Support for long video generation;

- Video generation at resolutions up to 720p;

- A single model that handles text-to-image, text-to-video, image-to-video, video-to-video and infinitely long video generation at any aspect ratio, resolution and duration;

- A more stable model architecture that supports training on multiple durations, resolutions, aspect ratios and frame rates;

- An open-source release of the latest automated data processing pipeline.

Space-time diffusion model ST-DiT-2

The author team made key improvements to the STDiT architecture from Open-Sora 1.0, aimed at improving the model's training stability and overall performance. Since the task at hand is sequence prediction, the team adopted a best practice from large language models (LLMs) and replaced the sinusoidal positional encoding in temporal attention with the more efficient rotary position embedding (RoPE). In addition, following the SD3 architecture, they introduced QK normalization to improve the stability of half-precision training. To support training at multiple resolutions, aspect ratios and frame rates, the ST-DiT-2 architecture proposed by the team can automatically scale its positional encoding and handle inputs of different sizes.
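For readers who want to see the two changes concretely, here is a minimal pure-Python sketch of RoPE and QK normalization for a single query/key pair in temporal attention. It is not Open-Sora's implementation: the function names, the common RoPE base of 10000, the choice of RMS normalization without a learnable gain for the QK-norm step, and applying the norm before the rotation are all our assumptions.

```python
import math


def rope(x: list[float], pos: int, base: float = 10000.0) -> list[float]:
    """Rotate each pair (x[2k], x[2k+1]) by the angle pos * base**(-2k/d)."""
    d = len(x)
    if d % 2:
        raise ValueError("RoPE needs an even head dimension")
    out: list[float] = []
    for i in range(0, d, 2):  # i == 2k
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = x[i], x[i + 1]
        out += [x0 * c - x1 * s, x0 * s + x1 * c]
    return out


def rms_norm(x: list[float], eps: float = 1e-6) -> list[float]:
    """Scale x to unit root-mean-square; this sketch omits the learnable gain."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [v / rms for v in x]


def attention_logit(q, k, q_pos, k_pos, qk_norm=True):
    """Scaled dot-product logit between a query frame and a key frame in temporal attention."""
    if qk_norm:  # normalize q and k before the dot product
        q, k = rms_norm(q), rms_norm(k)
    q, k = rope(q, q_pos), rope(k, k_pos)
    return sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q))


if __name__ == "__main__":
    q, k = [0.5, -1.0, 0.25, 2.0], [1.0, 0.3, -0.7, 0.2]
    # RoPE: the logit depends only on the offset between positions (5 - 3 == 12 - 10).
    print(attention_logit(q, k, 5, 3), attention_logit(q, k, 12, 10))
    # QK-norm: scaling q by 1000 barely changes the logit.
    print(attention_logit([1000 * v for v in q], k, 5, 3))
```

The first print shows RoPE's key property, that the score depends only on the relative offset between frames; the second shows that after QK normalization the logit stays bounded even when q is scaled up, the kind of guarantee that helps fp16 training.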

Multi-stage training

According to the Open-Sora technical report, Open-Sora uses multi-stage training, in which each stage continues training from the weights of the previous stage. Compared with single-stage training, this approach reaches high-quality video generation more efficiently by introducing higher-resolution data step by step.

In the first stage, most videos are at 144p, mixed with images and 240p and 480p videos. This stage lasts about one week and runs for 81k steps. In the second stage, most video data is raised to 240p and 480p; training takes 1 day and runs for 22k steps. The third stage moves further up to 480p and 720p, again taking 1 day and completing 4k steps. The whole multi-stage process takes about 9 days. Compared with Open-Sora 1.0, video generation quality has improved along multiple dimensions.
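The reported schedule can be written down as a small config and its totals checked. The Stage class and its field names are ours, not Open-Sora's config format; the figures are the ones quoted above, with "about one week" rounded to 7 days.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    main_video_res: tuple[str, ...]  # resolution(s) most videos use in this stage
    days: float                      # approximate wall-clock training time
    steps: int                       # training steps


STAGES = [
    Stage("stage 1", ("144p",), days=7.0, steps=81_000),  # also mixes in images and 240p/480p clips
    Stage("stage 2", ("240p", "480p"), days=1.0, steps=22_000),
    Stage("stage 3", ("480p", "720p"), days=1.0, steps=4_000),
]


def summarize(stages: list[Stage]) -> tuple[float, int]:
    """Total training time and steps across all stages."""
    return sum(s.days for s in stages), sum(s.steps for s in stages)


if __name__ == "__main__":
    days, steps = summarize(STAGES)
    print(f"~{days:g} days, {steps:,} steps")  # ~9 days, 107,000 steps
    for prev, cur in zip(STAGES, STAGES[1:]):
        print(f"{cur.name} initializes from the final weights of {prev.name}")
```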

Unified image-to-video/video-to-video framework

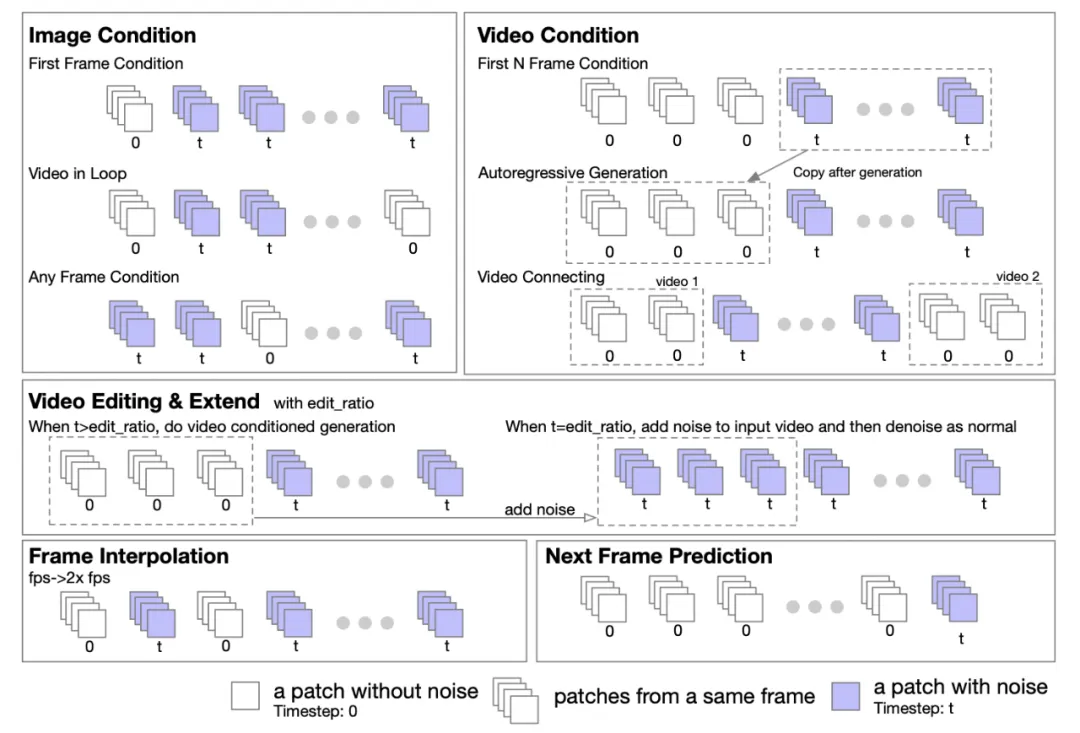

The author team stated that, thanks to the properties of the Transformer, the DiT architecture can easily be extended to image-to-video and video-to-video tasks. They proposed a masking strategy to support conditioning on images and videos. By setting different masks, a variety of generation tasks can be supported, including image-to-video, looping video, video extension, autoregressive video generation, video connection, video editing and frame interpolation.
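The idea of one model serving many tasks through masks can be sketched as a per-frame flag saying which frames are given and which are generated. This is an illustration under our own assumptions: the task names, the default k and working on whole frames (rather than latent frames) are hypothetical, and merge_condition shows only one common way to apply such a mask during denoising, not necessarily Open-Sora's.

```python
def condition_mask(task: str, num_frames: int, k: int = 4) -> list[bool]:
    """Per-frame mask: True = frame is given as a clean condition, False = frame is generated."""
    mask = [False] * num_frames
    if task == "text_to_video":          # nothing is given
        pass
    elif task == "image_to_video":       # the input image becomes frame 0
        mask[0] = True
    elif task == "video_extension":      # the first k frames come from the existing clip
        for i in range(min(k, num_frames)):
            mask[i] = True
    elif task == "video_connection":     # first and last frames are given; the middle is filled in
        mask[0] = mask[-1] = True
    elif task == "frame_interpolation":  # every other frame is given
        for i in range(0, num_frames, 2):
            mask[i] = True
    else:
        raise ValueError(f"unknown task: {task}")
    return mask


def merge_condition(noisy: list, clean: list, mask: list[bool]) -> list:
    """At each denoising step, overwrite conditioned frames with their clean values."""
    return [c if m else n for n, c, m in zip(noisy, clean, mask)]


if __name__ == "__main__":
    print(condition_mask("video_connection", 6))
```

Autoregressive generation then amounts to repeated video extension, each pass conditioning on the tail of the previous one.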

Mask strategy supporting image and video conditioning

The author team stated that, inspired by UL2 [2], they introduced a random masking strategy during training. Specifically, the frames to be unmasked are chosen at random during training, including but not limited to the first frame, the first k frames, the last k frames, or any k frames. The authors also noted that, in experiments with Open-Sora 1.0, applying the masking strategy with 50% probability let the model learn to handle image conditioning in only a small number of steps. In the latest version of Open-Sora, they pre-train from scratch with the masking strategy.
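A minimal sketch of the UL2-style random masking described above, assuming a 50% chance of masking per sample and the four patterns listed (first frame, first k, last k, any k). The function name, max_k and the uniform choice among patterns are our assumptions; the report's actual sampling weights are not given here.

```python
import random


def sample_training_mask(num_frames: int, rng: random.Random,
                         mask_prob: float = 0.5, max_k: int = 4) -> list[bool]:
    """Randomly choose which frames are unmasked (True = kept clean as a condition).

    With probability 1 - mask_prob nothing is unmasked, i.e. an ordinary text-to-video sample.
    """
    mask = [False] * num_frames
    if rng.random() >= mask_prob:
        return mask
    k = rng.randint(1, min(max_k, num_frames))
    pattern = rng.choice(["first_frame", "first_k", "last_k", "random_k"])
    if pattern == "first_frame":
        chosen = [0]
    elif pattern == "first_k":
        chosen = list(range(k))
    elif pattern == "last_k":
        chosen = list(range(num_frames - k, num_frames))
    else:  # any k frames
        chosen = rng.sample(range(num_frames), k)
    for i in chosen:
        mask[i] = True
    return mask


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        print(sample_training_mask(8, rng))
```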

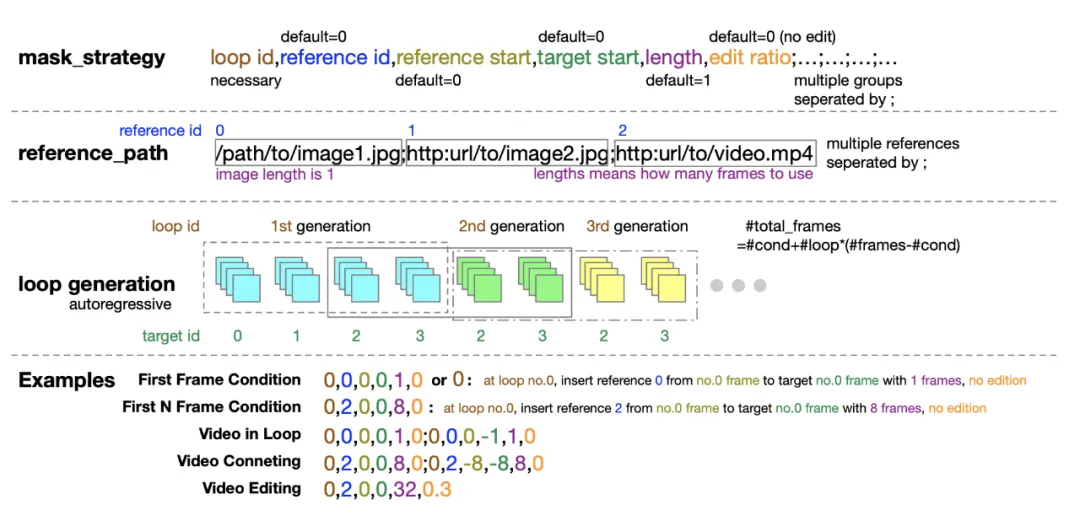

In addition, the team provides a detailed guide to configuring the masking strategy at inference time. Each strategy is defined as a tuple of five numbers, which gives considerable flexibility and control.
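To show how a five-number tuple could drive the mask at inference time, here is a parser for strings like "0,0,0,0,1". Treat the field meanings (loop index, reference index, reference start frame, target start frame, number of frames), the ';' separator and negative positions counting from the end as assumptions based on our reading of the repository's documentation; check the Open-Sora docs for the authoritative format.

```python
from typing import NamedTuple


class MaskEntry(NamedTuple):
    # Field names and order are our assumption; verify against the Open-Sora docs.
    loop_id: int       # which generation pass (for long, multi-pass videos) the entry applies to
    ref_id: int        # which reference image or video to take frames from
    ref_start: int     # first frame to take from the reference
    target_start: int  # where those frames are placed in the clip being generated
    length: int        # how many frames to place


def parse_mask_strategy(spec: str) -> list[MaskEntry]:
    """Parse entries such as "0,0,0,0,1;0,1,0,-1,1" (';' separates entries in this sketch)."""
    entries = []
    for part in spec.split(";"):
        if not part.strip():
            continue
        fields = [int(v) for v in part.split(",")]
        if len(fields) != 5:
            raise ValueError(f"expected 5 numbers, got {len(fields)}: {part!r}")
        entries.append(MaskEntry(*fields))
    return entries


def conditioned_frames(entries: list[MaskEntry], num_frames: int, loop_id: int = 0) -> list[bool]:
    """True where some entry places a reference frame during the given pass."""
    mask = [False] * num_frames
    for e in entries:
        if e.loop_id != loop_id:
            continue
        start = e.target_start % num_frames  # negative positions count from the end
        for pos in range(start, min(start + e.length, num_frames)):
            mask[pos] = True
    return mask


if __name__ == "__main__":
    # Hypothetical example: pin reference 0 to the first frame and reference 1 to the last.
    entries = parse_mask_strategy("0,0,0,0,1;0,1,0,-1,1")
    print(conditioned_frames(entries, 8))
```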

Mask strategy configuration instructions

Support for multi-duration/resolution/aspect ratio/frame rate training

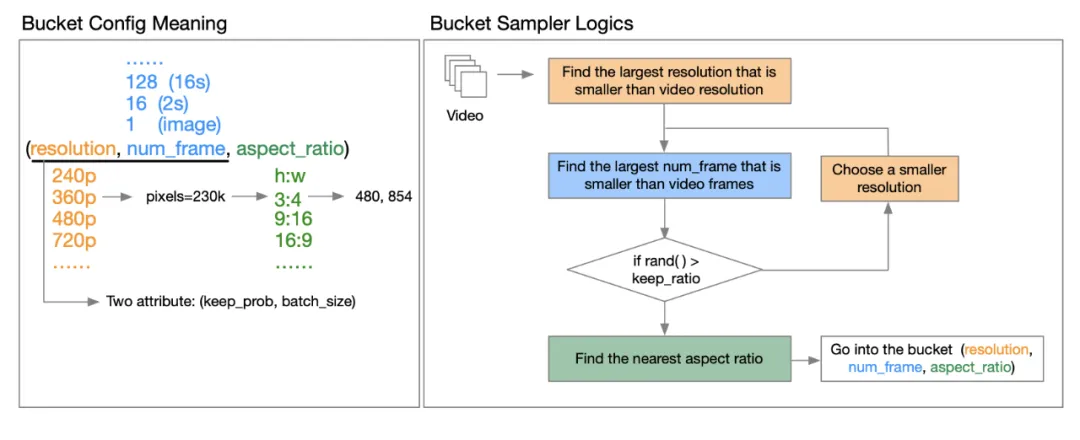

OpenAI's Sora technical report [3] points out that training on videos at their original resolution, aspect ratio and length increases sampling flexibility and improves framing and composition. To achieve this, the author team proposed a bucketing strategy.

How does it work? According to the technical report, a bucket is a triplet of (resolution, number of frames, aspect ratio). The team predefined a range of aspect ratios for each resolution to cover the most common video aspect ratios. Before each training epoch, they reshuffle the dataset and assign each sample to a bucket according to its properties. Specifically, each sample goes into a bucket whose resolution and frame count are less than or equal to those of the video.
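The bucket assignment can be sketched in a few lines. The resolutions, aspect-ratio lists and frame counts below are made up for illustration, "resolution" is compared on the video's short side, and choosing the largest fitting bucket with the closest aspect ratio is our tie-breaking rule; Open-Sora's own bucket table and comparison may differ.

```python
import random
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical bucket table: resolution -> (short side in pixels, allowed aspect ratios as h/w).
BUCKET_TABLE = {
    "144p": (144, [9 / 16, 3 / 4, 1.0, 4 / 3, 16 / 9]),
    "240p": (240, [9 / 16, 3 / 4, 1.0, 4 / 3, 16 / 9]),
    "480p": (480, [9 / 16, 1.0, 16 / 9]),
}
FRAME_COUNTS = [1, 16, 32, 64]  # 1 frame == a still image (assumption)


@dataclass(frozen=True)
class Video:
    name: str
    height: int
    width: int
    num_frames: int


def assign_bucket(v: Video):
    """Return a (resolution, num_frames, aspect_ratio) bucket, or None if the video is too small.

    Takes the largest resolution and frame count that do not exceed the video's own, then
    the closest predefined aspect ratio at that resolution.
    """
    short_side = min(v.height, v.width)
    fitting_res = [(px, res) for res, (px, _) in BUCKET_TABLE.items() if px <= short_side]
    fitting_frames = [t for t in FRAME_COUNTS if t <= v.num_frames]
    if not fitting_res or not fitting_frames:
        return None
    _, res = max(fitting_res)
    ratio = v.height / v.width
    aspect = min(BUCKET_TABLE[res][1], key=lambda r: abs(r - ratio))
    return res, max(fitting_frames), aspect


def bucketize(videos: list[Video], epoch_seed: int) -> dict:
    """Reshuffle the dataset (done before every epoch) and group sample names by bucket."""
    order = list(videos)
    random.Random(epoch_seed).shuffle(order)
    buckets = defaultdict(list)
    for v in order:
        key = assign_bucket(v)
        if key is not None:
            buckets[key].append(v.name)
    return dict(buckets)


if __name__ == "__main__":
    print(assign_bucket(Video("clip", 1080, 1920, 100)))
```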

Open-Sora bucketing strategy

The author team further explained that, to reduce compute requirements, they attach two attributes, keep_prob and batch_size, to each (resolution, number of frames) pair, which lowers computational cost and enables multi-stage training. This lets them control the number of samples in each bucket and balance the GPU load by searching for a suitable batch size for each bucket. The technical report covers this in more detail; interested readers can find it on GitHub: https://github.com/hpcaitech/Open-Sora
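The keep_prob and batch_size attributes can be sketched as follows. The numbers in BUCKET_CONFIG are hypothetical, and the rule of trying eligible buckets from largest to smallest, staying in each with its keep_prob and otherwise falling back to a smaller one, is our reading of the description rather than code taken from the repository.

```python
import random

# Hypothetical settings per (resolution, num_frames): (keep_prob, batch_size).
# Costlier buckets get a lower keep_prob and a smaller batch so GPU load stays balanced.
BUCKET_CONFIG = {
    ("480p", 64): (0.2, 2),
    ("480p", 16): (0.5, 8),
    ("240p", 64): (0.5, 8),
    ("240p", 16): (1.0, 32),
}
RES_PIXELS = {"480p": 480, "240p": 240}  # short side in pixels


def pick_bucket(short_side: int, num_frames: int, rng: random.Random, config=BUCKET_CONFIG):
    """Try eligible buckets from largest to smallest; stay in each one with its keep_prob."""
    eligible = [key for key in config
                if RES_PIXELS[key[0]] <= short_side and key[1] <= num_frames]
    eligible.sort(key=lambda key: (RES_PIXELS[key[0]], key[1]), reverse=True)
    for key in eligible:
        keep_prob, _ = config[key]
        if rng.random() < keep_prob:
            return key
    return None  # sample is skipped this epoch


def make_batches(assignments, config=BUCKET_CONFIG):
    """Group (sample_id, bucket) pairs by bucket and cut them into that bucket's batch size.

    Only samples from the same bucket share a batch, so each batch has one resolution and
    frame count. The last batch of a bucket may be smaller than batch_size in this sketch.
    """
    per_bucket: dict = {}
    for sample_id, key in assignments:
        if key is not None:
            per_bucket.setdefault(key, []).append(sample_id)
    batches = []
    for key, ids in per_bucket.items():
        _, batch_size = config[key]
        for i in range(0, len(ids), batch_size):
            batches.append((key, ids[i:i + batch_size]))
    return batches


if __name__ == "__main__":
    rng = random.Random(0)
    picks = [(i, pick_bucket(720, 100, rng)) for i in range(100)]
    for key, ids in make_batches(picks):
        print(key, len(ids))
```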

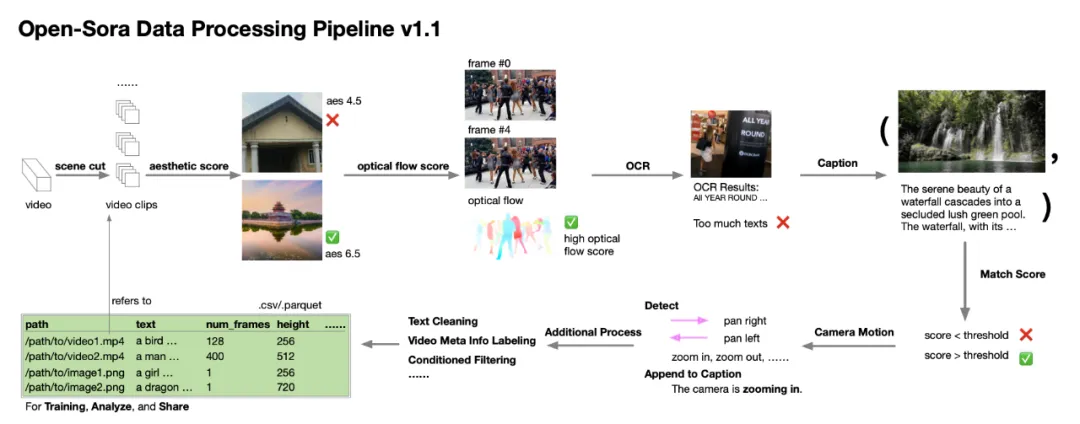

Data collection and preprocessing pipeline

The author team also provides detailed guidance on data collection and processing. According to the technical report, while developing Open-Sora 1.0 they realized that data quantity and quality are critical to training a high-performance model, so they devoted effort to expanding and improving the dataset. They built an automated data processing pipeline following SVD (Stable Video Diffusion), covering scene segmentation, captioning, various scoring and filtering steps, and dataset management conventions. They have also shared the data processing scripts with the open source community, so developers can use these resources, together with the technical report and code, to process and refine their own datasets efficiently.
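The scene-segmentation and filtering stages can be illustrated with a toy pipeline. The frame-difference cut detector is a simple stand-in chosen by us, and caption_fn and score_fn are placeholders for whatever captioning and scoring models are used; none of these names come from Open-Sora's scripts. Filtering before captioning is a design choice of this sketch, since captioning is usually the most expensive step.

```python
def split_scenes(frames: list[list[float]], threshold: float) -> list[tuple[int, int]]:
    """Cut wherever the mean absolute pixel difference between consecutive frames exceeds threshold.

    frames: equally sized flat lists of pixel intensities (a toy stand-in for decoded video).
    Returns (start, end) frame ranges, end exclusive.
    """
    if not frames:
        return []
    cuts = [0]
    for i in range(1, len(frames)):
        prev, cur = frames[i - 1], frames[i]
        diff = sum(abs(a - b) for a, b in zip(prev, cur)) / len(cur)
        if diff > threshold:
            cuts.append(i)
    cuts.append(len(frames))
    return list(zip(cuts, cuts[1:]))


def run_pipeline(frames, caption_fn, score_fn, threshold=30.0, min_frames=3, min_score=0.5):
    """Scene split -> score -> filter -> caption. caption_fn and score_fn are placeholders."""
    results = []
    for start, end in split_scenes(frames, threshold):
        clip = frames[start:end]
        if end - start < min_frames or score_fn(clip) < min_score:
            continue  # drop clips that are too short or score too low
        results.append({"span": (start, end), "caption": caption_fn(clip)})
    return results


if __name__ == "__main__":
    video = [[0.0] * 4] * 5 + [[100.0] * 4] * 2 + [[200.0] * 4] * 4  # three "shots"
    print(run_pipeline(video, caption_fn=lambda c: f"{len(c)} frames", score_fn=lambda c: 1.0))
    # [{'span': (0, 5), 'caption': '5 frames'}, {'span': (7, 11), 'caption': '4 frames'}]
```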

Open-Sora data processing pipeline

Comprehensive evaluation of Open-Sora's performance

Examples of generated videos

Open-Sora's most eye-catching feature is that it turns the scenes in your mind, described in text, into moving video. Images and ideas that flash through your mind can now be recorded and shared with others. Here, the author tried several different prompts as starting points.

For example, the author generated a video of a walk through a winter forest: fresh snow has just fallen, the pine trees are covered in white, and the dark pine needles and white snowflakes form clear layers.

Or a quiet night in a dark forest like those in countless fairy tales, with a deep lake sparkling under a sky full of bright stars.

An aerial night view over a bustling island is even more striking: the warm yellow lights and ribbon-like blue water instantly draw you into the relaxed pace of a vacation.

A city bustling with traffic, its high-rise buildings and street shops still lit late at night, has a flavor all its own.

Beyond scenery, Open-Sora can also depict living things. Whether it's a bright red flower,

or a chameleon slowly turning its head, Open-Sora can generate fairly realistic videos.

The author also tried a variety of prompts and provides many generated videos for reference, covering different content, resolutions, aspect ratios and durations.

The author also found that Open-Sora can generate video clips at multiple resolutions with a single simple command, removing many creative constraints.

Resolution: 16*240p

We can also feed Open-Sora a still image to generate a short video.

Open-Sora can also smoothly connect two still images. In the video below, the light and shadow shift from afternoon to dusk, and every frame is a poem of time.

As another example, to edit an existing video, a single simple command turns the originally bright forest into one under heavy snowfall.

We can also use Open-Sora to generate high-definition images.

Notably, Open-Sora's model weights are freely available in their open source repository, so you can download them and give it a try. Since it also supports video stitching, you can create a short story video for free and bring your ideas to life.

Model weights: https://github.com/hpcaitech/Open-Sora

Current limitations and future plans

Although good progress has been made in reproducing a Sora-like video model, the author team also candidly points out that the generated videos still need improvement in many respects, including noise during generation, lack of temporal consistency, poor quality when generating people, and low aesthetic scores. The team stated that addressing these issues will be a priority in the next version, aiming for a higher standard of video generation. Interested readers may want to keep following the project; we look forward to the next surprise from the Open-Sora community.

Open source address: https://github.com/hpcaitech/Open-Sora
