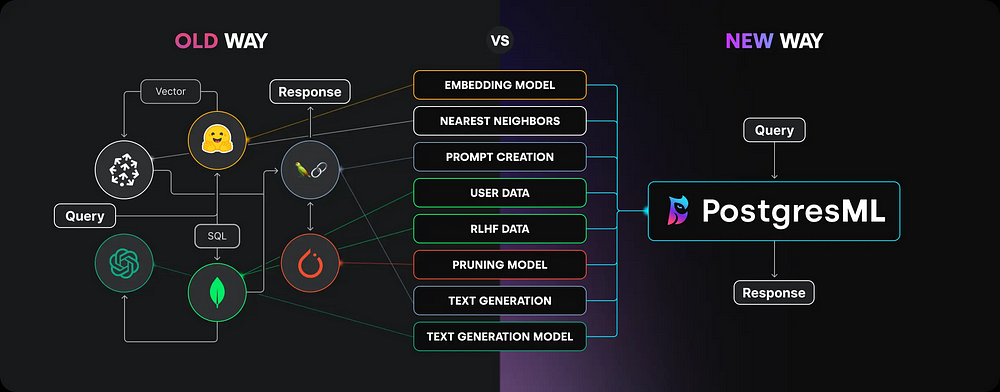

The prevailing trend in machine learning involves transferring data to the model's environment for training. However, what if we reversed this process? Given that modern databases are significantly larger than machine learning models, wouldn't it be more efficient to move the models to the datasets?

This is the fundamental concept behind PostgresML – the data remains in its location, and you bring your code to the database. This inverted approach to machine learning offers numerous practical advantages that challenge conventional notions of a "database."

PostgresML: An Overview and its Advantages

PostgresML is a comprehensive machine learning platform built upon the widely-used PostgreSQL database. It introduces a novel approach called "in-database" machine learning, enabling you to execute various ML tasks within SQL without needing separate tools for each step.

Despite its relative novelty, PostgresML offers several key benefits:

- In-database ML: Trains, deploys, and runs ML models directly within your PostgreSQL database. This eliminates the need for constant data transfer between the database and external ML frameworks, enhancing efficiency and reducing latency.

- SQL API: Leverages SQL for training, fine-tuning, and deploying machine learning models. This simplifies workflows for data analysts and scientists less familiar with multiple ML frameworks.

- Pre-trained Models: Integrates with HuggingFace, providing access to numerous pre-trained models such as Llama, Falcon, BERT, and Mistral.

- Customization and Flexibility: Supports a wide range of algorithms from Scikit-learn, XGBoost, LightGBM, PyTorch, and TensorFlow, allowing for diverse supervised learning tasks directly within the database.

- Ecosystem Integration: Works with any environment supporting Postgres and offers SDKs for multiple programming languages (JavaScript, Python, and Rust are particularly well-supported).

This tutorial will demonstrate these features using a typical machine learning workflow:

- Data Loading

- Data Preprocessing

- Model Training

- Hyperparameter Fine-tuning

- Production Deployment

All these steps will be performed within a Postgres database. Let's begin!

A Complete Supervised Learning Workflow with PostgresML

Getting Started: PostgresML Free Tier

- Create a free account at http://ipnx.cn/link/3349958a3e56580d4e415da345703886:

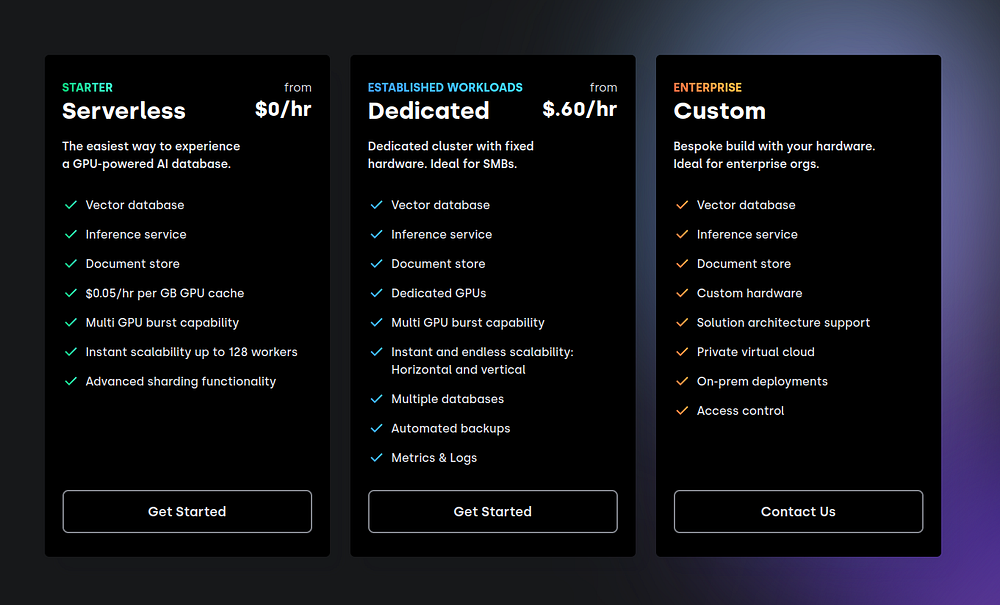

- Select the free tier, which offers generous resources:

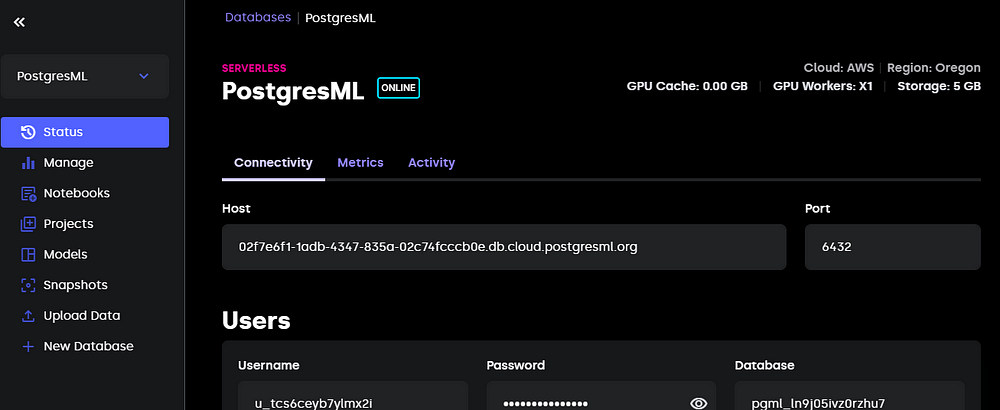

After signup, you'll access your PostgresML console for managing projects and resources.

The "Manage" section allows you to scale your environment based on computational needs.

1. Installing and Setting Up Postgres

PostgresML requires PostgreSQL. Installation guides for various platforms are available:

- Windows

- Mac OS

- Linux

For WSL2, the following commands suffice:

sudo apt update
sudo apt install postgresql postgresql-contrib
sudo passwd postgres  # Set a new Postgres password
# Close and reopen your terminal

Verify the installation:

psql --version

For a more user-friendly experience than the terminal, consider the VSCode extension.

2. Database Connection

Use the connection details from your PostgresML console:

Connect using psql:

psql -h "host" -U "username" -p 6432 -d "database_name"

Alternatively, use the VSCode extension as described in its documentation.
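For programmatic access, the same connection details can be assembled into a libpq-style connection URI. The following is a minimal sketch using only the standard library; the function name `build_postgres_uri` and the sample values are placeholders introduced for illustration, and the password is assumed to come from your console.

```python
from urllib.parse import quote


def build_postgres_uri(user: str, password: str, host: str,
                       database: str, port: int = 6432) -> str:
    """Build a libpq-style connection URI from console connection details."""
    # Percent-encode credentials so characters like '@' or '/' stay valid.
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(database, safe='')}"
    )


if __name__ == "__main__":
    # Placeholder values; substitute the ones shown in your console.
    print(build_postgres_uri("username", "p@ss/word", "host", "database_name"))
```

A URI in this form can be passed to psql as its database argument, or to a driver such as psycopg2 as the connection string.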

Enable the pgml extension:

CREATE EXTENSION IF NOT EXISTS pgml;

Verify the installation:

SELECT pgml.version();

3. Data Loading

We'll use the Diamonds dataset from Kaggle. Download it as a CSV or use this Python snippet:

import seaborn as sns

diamonds = sns.load_dataset("diamonds")

diamonds.to_csv("diamonds.csv", index=False)

Create the table:

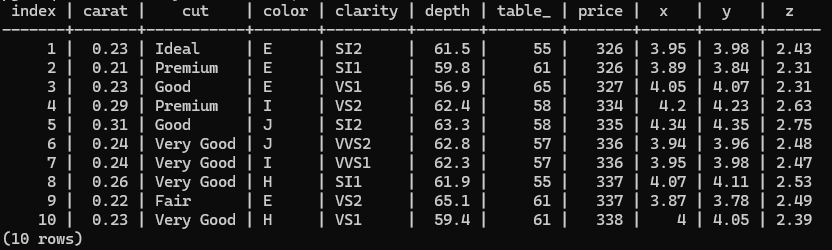

CREATE TABLE IF NOT EXISTS diamonds (
    index SERIAL PRIMARY KEY,
    carat FLOAT,
    cut VARCHAR(255),
    color VARCHAR(255),
    clarity VARCHAR(255),
    depth FLOAT,
    table_ FLOAT,
    price INT,
    x FLOAT,
    y FLOAT,
    z FLOAT
);

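Populate the table from psql. The \copy meta-command reads the file on the client machine and must be written on a single line:

\copy diamonds (carat, cut, color, clarity, depth, table_, price, x, y, z) FROM '~/full/path/to/diamonds.csv' DELIMITER ',' CSV HEADER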

Verify the data:

SELECT * FROM diamonds LIMIT 10;
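When a CSV is loaded with a column list and the HEADER option, the header row is skipped and values are matched to columns by position, so the file's `table` column lands in `table_` only if the order lines up. A small pre-flight check along these lines can catch a mismatched file before loading. This is a sketch that assumes the CSV was exported from seaborn as shown earlier; `header_matches` and `EXPECTED` are names made up for this example.

```python
import csv
import io

# Column order expected in the CSV; the file's "table" maps to "table_" in SQL.
EXPECTED = ["carat", "cut", "color", "clarity", "depth",
            "table", "price", "x", "y", "z"]


def header_matches(csv_file, expected=EXPECTED) -> bool:
    """Return True if the first CSV row lists the expected columns in order."""
    header = next(csv.reader(csv_file), [])
    return [name.strip().lower() for name in header] == expected


if __name__ == "__main__":
    sample = io.StringIO("carat,cut,color,clarity,depth,table,price,x,y,z\n"
                         "0.23,Ideal,E,SI2,61.5,55.0,326,3.95,3.98,2.43\n")
    print(header_matches(sample))
```

A CSV from another source may carry an extra leading index column, in which case the check fails and the column list needs adjusting.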

4. Model Training

Basic Training

Train an XGBoost regressor:

SELECT pgml.train(
    project_name => 'Diamond prices prediction',
    task => 'regression',
    relation_name => 'diamonds',
    y_column_name => 'price',
    algorithm => 'xgboost'
);

Train a multi-class classifier:

SELECT pgml.train(
    project_name => 'Diamond cut quality prediction',
    task => 'classification',
    relation_name => 'diamonds',
    y_column_name => 'cut',
    algorithm => 'xgboost',
    test_size => 0.1
);

Preprocessing

Train a random forest model with preprocessing:

SELECT pgml.train(
    project_name => 'Diamond prices prediction',
    task => 'regression',
    relation_name => 'diamonds',
    y_column_name => 'price',
    algorithm => 'random_forest',
    preprocess => '{
        "carat": {"scale": "standard"},
        "depth": {"scale": "standard"},
        "table_": {"scale": "standard"},
        "cut": {"encode": "target", "scale": "standard"},
        "color": {"encode": "target", "scale": "standard"},
        "clarity": {"encode": "target", "scale": "standard"}
    }'::JSONB
);

PostgresML provides various preprocessing options (encoding, imputing, scaling).
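The preprocess argument is ordinary JSON keyed by column name, so it can be generated rather than typed by hand when a table has many columns. The helper below is a hypothetical sketch (`build_preprocess` is not part of PostgresML); it only reproduces the structure used in the example above.

```python
import json


def build_preprocess(numeric, categorical, scale="standard", encode="target"):
    """Return the JSON text for a preprocess argument like the one above."""
    spec = {name: {"scale": scale} for name in numeric}
    # Categorical columns get an encoder in addition to the scaler.
    spec.update({name: {"encode": encode, "scale": scale} for name in categorical})
    return json.dumps(spec, indent=2)


if __name__ == "__main__":
    print(build_preprocess(["carat", "depth", "table_"],
                           ["cut", "color", "clarity"]))
```

The printed JSON can be pasted between the single quotes of `preprocess => '...'::JSONB`.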

Specifying Hyperparameters

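Train an XGBoost regressor with custom hyperparameters, passed as JSON through the hyperparams argument:

SELECT pgml.train(
    project_name => 'Diamond prices prediction',
    task => 'regression',
    relation_name => 'diamonds',
    y_column_name => 'price',
    algorithm => 'xgboost',
    -- Example values; tune them for your data
    hyperparams => '{
        "n_estimators": 100,
        "max_depth": 5
    }'::JSONB
);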

Hyperparameter Tuning

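Perform a grid search by setting search to 'grid' and listing candidate values in search_params:

SELECT pgml.train(
    project_name => 'Diamond prices prediction',
    task => 'regression',
    relation_name => 'diamonds',
    y_column_name => 'price',
    algorithm => 'xgboost',
    search => 'grid',
    -- Example grid; every combination is trained
    search_params => '{
        "max_depth": [5, 7],
        "n_estimators": [100, 300]
    }'::JSONB
);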
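A grid search evaluates every combination of the candidate values, so its cost grows multiplicatively with each added hyperparameter. The sketch below enumerates a grid to make that concrete. It illustrates the general technique rather than PostgresML's internal implementation, and the grid values are hypothetical.

```python
from itertools import product


def expand_grid(search_params):
    """Yield one hyperparameter dict per combination in the grid."""
    names = list(search_params)
    for values in product(*(search_params[name] for name in names)):
        yield dict(zip(names, values))


if __name__ == "__main__":
    # Hypothetical grid, chosen only for illustration.
    grid = {"max_depth": [5, 7], "n_estimators": [100, 300]}
    for candidate in expand_grid(grid):
        print(candidate)
```

Two values for each of two hyperparameters already means four training runs; adding a third hyperparameter with three values would raise that to twelve.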

5. Model Evaluation

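Use pgml.predict for predictions. Called as pgml.predict(project_name, features), it scores one row of feature values with the project's currently deployed model.

To use a specific model, pass its numeric model ID in place of the project name.

Retrieve model IDs from the pgml.models table:

SELECT models.id, models.algorithm, models.metrics
FROM pgml.models
JOIN pgml.projects ON projects.id = models.project_id
WHERE projects.name = 'Diamond prices prediction';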

6. Model Deployment

PostgresML automatically deploys the best-performing model. For finer control, use pgml.deploy:

SELECT * FROM pgml.deploy(
    project_name => 'Diamond prices prediction',
    strategy => 'most_recent',
    algorithm => 'xgboost'
);

Deployment strategies include best_score, most_recent, and rollback.
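To make the three strategies concrete, here is a simplified model of which model each one would select. It reflects one reading of the strategy names (best key metric, latest training run, previously deployed model) and is not PostgresML's actual code; the `Model` record, the `choose` function, and the assumption that a higher score is better are all made up for this sketch.

```python
from dataclasses import dataclass


@dataclass
class Model:
    id: int
    score: float      # key metric; higher is treated as better in this sketch
    trained_at: int   # hypothetical training timestamp


def choose(strategy, models, deployment_history):
    """Pick the model ID a strategy would deploy (simplified illustration)."""
    if strategy == "best_score":
        return max(models, key=lambda m: m.score).id
    if strategy == "most_recent":
        return max(models, key=lambda m: m.trained_at).id
    if strategy == "rollback":
        # Return to whatever was deployed before the current model.
        if len(deployment_history) < 2:
            raise ValueError("no earlier deployment to roll back to")
        return deployment_history[-2]
    raise ValueError(f"unknown strategy: {strategy}")
```

With three models where the second has the highest score and the third is the newest, best_score picks the second, most_recent picks the third, and rollback returns to the entry before the last one in the deployment history.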

Further Exploration of PostgresML

PostgresML extends beyond supervised learning. The homepage features a SQL editor for experimentation. Building a consumer-facing ML service might involve:

- Creating a user interface (e.g., using Streamlit or Taipy).

- Developing a backend (Python, Node.js).

- Using libraries like psycopg2 or pg-promise for database interaction.

- Preprocessing data in the backend.

- Triggering pgml.predict upon user interaction.
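The last two steps can be sketched as a small backend function that forwards user input to pgml.predict through a database cursor. This is a minimal sketch under stated assumptions: `predict_price` is a hypothetical name, the cursor is any DB-API cursor that uses `%s` placeholders (psycopg2 does), and the project is assumed to have been trained on numeric features only.

```python
def predict_price(cursor, project_name, features):
    """Run pgml.predict through a DB-API cursor and return the prediction."""
    # %s placeholders let the driver handle quoting; psycopg2 adapts a
    # Python list to a SQL array, which is then cast to real[].
    cursor.execute(
        "SELECT pgml.predict(%s, %s::real[]) AS prediction",
        (project_name, [float(value) for value in features]),
    )
    row = cursor.fetchone()
    return row[0] if row else None
```

Passing values as query parameters instead of formatting them into the SQL string keeps user input from being interpreted as SQL.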

Conclusion

PostgresML offers a novel approach to machine learning. To further your understanding, explore the PostgresML documentation and consider resources like DataCamp's SQL courses and AI fundamentals tutorials.

The above is the detailed content of PostgresML Tutorial: Doing Machine Learning With SQL. For more information, please follow other related articles on the PHP Chinese website!
