Recently I decided that I would like to do a quick web scraping and data analysis project. Because my brain likes to come up with big ideas that would take lots of time, I decided to challenge myself to come up with something simple that could viably be done in a few hours.

Here's what I came up with:

As my undergrad degree was originally in Foreign Languages (French and Spanish), I thought it'd be fun to web scrape some language-related data. I wanted to use the BeautifulSoup library, which can parse static html but can't handle dynamic web pages that need onclick events to reveal the whole dataset (i.e. clicking through to the next page of data if the page is paginated).

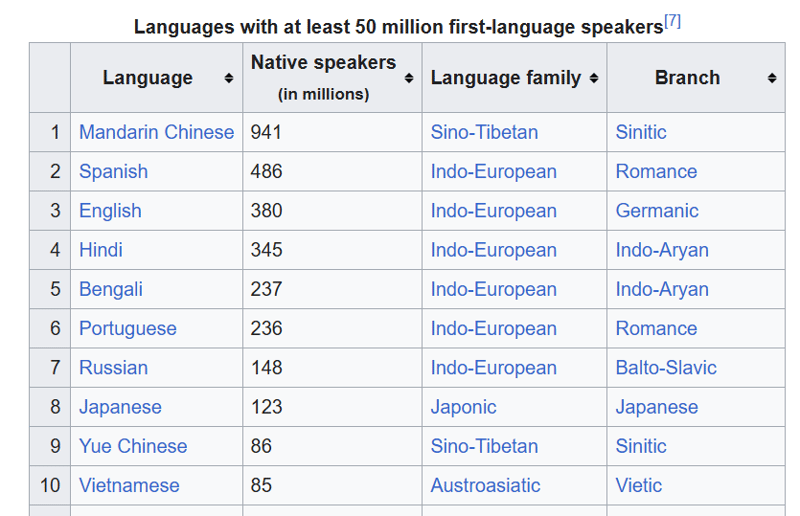

I decided on this Wikipedia page of the most commonly spoken languages.

I wanted to do the following:

- Get the html for the page and output to a .txt file

- Use beautiful soup to parse the html file and extract the table data

- Write the table to a .csv file

- Come up with 10 questions I wanted to answer for this dataset using data analysis

- Answer those questions using pandas and a Jupyter Notebook

I split the project into these steps for separation of concerns, but I also wanted to avoid making unnecessary repeat requests to Wikipedia every time I reran the script. Saving the html to a file and then working with it in a separate script means that you don't need to keep re-requesting the data, as you already have it.
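
One way to make the "request once, reuse the file" idea explicit is a small caching helper. This is only a sketch and not code from the project: `load_html`, `fake_fetch` and the demo file name are hypothetical, and a stand-in fetch function is used so the example runs without touching the network.

```python
import tempfile
from pathlib import Path


def load_html(path, fetch):
    """Return the HTML saved at `path`, calling `fetch()` only if the file is missing."""
    cache = Path(path)
    if cache.exists():
        return cache.read_text(encoding="utf-8")
    html = fetch()
    cache.write_text(html, encoding="utf-8")
    return html


# Stand-in for a real request, so the sketch makes no network calls
calls = []

def fake_fetch():
    calls.append(1)
    return "<html>stand-in page</html>"


demo_path = Path(tempfile.gettempdir()) / "demo_languages_html.txt"
demo_path.unlink(missing_ok=True)  # start from a clean state

first = load_html(demo_path, fake_fetch)   # file missing, so fetch runs
second = load_html(demo_path, fake_fetch)  # file exists, so fetch is skipped
print(len(calls))
```

In real use, the second argument could be something like `lambda: requests.get(url).text`.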

Project Link

The link to my github repo for this project is: https://github.com/gabrielrowan/Foreign-Languages-Analysis

Getting the html

First, I retrieved and output the html. After working with C# and C++, it's always a novelty to me how short and concise Python code is.

    import requests

    url = 'https://en.wikipedia.org/wiki/List_of_languages_by_number_of_native_speakers'
    response = requests.get(url)
    html = response.text

    # save the raw html so later steps can work from the file
    with open("languages_html.txt", "w", encoding="utf-8") as file:
        file.write(html)

Parsing the html

To parse the html with Beautiful Soup and select the table I was interested in, I did:

    from bs4 import BeautifulSoup

    with open("languages_html.txt", "r", encoding="utf-8") as file:
        soup = BeautifulSoup(file, 'html.parser')

    # get table
    top_languages_table = soup.select_one('.wikitable.sortable.static-row-numbers')

Then, I got the table header text to get the column names for my pandas dataframe:

    # get column names
    columns = top_languages_table.find_all("th")
    column_titles = [column.text.strip() for column in columns]

After that, I created the dataframe, set the column names, retrieved each table row and wrote each row to the dataframe:

    import pandas as pd

    # get table rows
    table_data = top_languages_table.find_all("tr")

    # define dataframe
    df = pd.DataFrame(columns=column_titles)

    # get table data, skipping the header row
    for row in table_data[1:]:
        row_data = row.find_all('td')
        row_data_txt = [cell.text.strip() for cell in row_data]
        print(row_data_txt)
        # append the row at the next free index
        df.loc[len(df)] = row_data_txt

Note - without using strip(), the cell text contained \n (newline) characters that weren't needed.
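
To illustrate the effect of strip(), here is a small sketch using a hand-written table row rather than the real Wikipedia html. The cell contents are made up for the example.

```python
from bs4 import BeautifulSoup

# A tiny hand-written row standing in for one row of the scraped table
html = "<table><tr><td>French\n</td><td>74\n</td></tr></table>"
soup = BeautifulSoup(html, "html.parser")
cells = soup.find_all("td")

raw = [cell.text for cell in cells]            # keeps the trailing newlines
clean = [cell.text.strip() for cell in cells]  # newlines removed

print(raw)    # ['French\n', '74\n']
print(clean)  # ['French', '74']
```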

Last, I wrote the dataframe to a .csv.
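
Writing the dataframe out might look something like the sketch below. The file name `languages.csv`, the column names and the placeholder rows are assumptions for illustration, not the project's actual values.

```python
import pandas as pd

# Stand-in for the scraped dataframe; the rows are placeholders, not real data
df = pd.DataFrame(
    {"Language": ["Language A", "Language B"], "Native Speakers": ["100", "50"]}
)

# index=False stops pandas writing the row index as an extra column
df.to_csv("languages.csv", index=False, encoding="utf-8")

# Reading the file back is a quick way to check what was written
check = pd.read_csv("languages.csv")
print(check)
```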

Analysing the data

In advance, I'd come up with these questions that I wanted to answer from the data:

- What is the total number of native speakers across all languages in the dataset?

- How many different types of language family are there?

- What is the total number of native speakers per language family?

- What are the top 3 most common language families?

- Create a pie chart showing the top 3 most common language families

- What is the most commonly occurring Language family - branch pair?

- Which languages are Sino-Tibetan in the table?

- Display a bar chart of the native speakers of all Romance and Germanic languages

- What percentage of total native speakers is represented by the top 5 languages?

- Which branch has the most native speakers, and which has the least?

The Results

While I won't go into the code to answer all of these questions, I will go into the two that involved charts.

Display a bar chart of the native speakers of all Romance and Germanic languages

First, I created a dataframe that only included rows where the branch name was 'Romance' or 'Germanic'.
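
A filter like this can be sketched with `isin`. The column names ('Language', 'Native Speakers', 'Branch') are assumptions about how the scraped columns were named, and the speaker counts are placeholder numbers rather than the scraped figures.

```python
import pandas as pd

# Placeholder rows; the numbers are NOT the real speaker counts
df = pd.DataFrame(
    {
        "Language": ["Spanish", "English", "Hindi", "French", "German"],
        "Native Speakers": [40, 30, 25, 20, 10],
        "Branch": ["Romance", "Germanic", "Indo-Aryan", "Romance", "Germanic"],
    }
)

# Keep only the rows whose branch is Romance or Germanic
romance_germanic = df[df["Branch"].isin(["Romance", "Germanic"])]
print(romance_germanic)
```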

Then I specified the x axis, y axis and the colour of the bars that I wanted for the chart:
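
With pandas' built-in plotting (which uses matplotlib under the hood), this step could look roughly like the following. The column names, the colour `teal` and the placeholder numbers are all assumptions for the sake of a runnable example.

```python
import pandas as pd

# Placeholder data standing in for the filtered Romance/Germanic dataframe
romance_germanic = pd.DataFrame(
    {
        "Language": ["Spanish", "English", "French", "German"],
        "Native Speakers": [40, 30, 20, 10],  # not real figures
    }
)

# x axis: language names, y axis: speaker counts, one colour for all bars
ax = romance_germanic.plot.bar(x="Language", y="Native Speakers", color="teal")
ax.set_ylabel("Native Speakers")
```

In a Jupyter Notebook the chart renders inline; in a plain script you would also need to call `matplotlib.pyplot.show()`.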

This created:

Create a pie chart showing the top 3 most common language families

To create the pie chart, I retrieved the top 3 most common language families and put these in a dataframe.

To do this, I grouped the rows by language family, summed the native speakers for each family, sorted the totals in descending order, and took the top 3 entries.
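
A sketch of that group, sum, sort and slice sequence is below. The column names and numbers are placeholders. Because scraped cell text arrives as strings, the sketch also converts the speaker counts to numbers first, which is an assumption about how the data was prepared.

```python
import pandas as pd

# Placeholder rows; scraped values start out as strings
df = pd.DataFrame(
    {
        "Language Family": [
            "Indo-European", "Sino-Tibetan", "Indo-European",
            "Afroasiatic", "Austronesian", "Sino-Tibetan",
        ],
        "Native Speakers": ["40", "50", "30", "20", "10", "5"],
    }
)

# Convert the text values to numbers so they can be summed
df["Native Speakers"] = pd.to_numeric(df["Native Speakers"])

top_families = (
    df.groupby("Language Family")["Native Speakers"]
    .sum()                         # total per family
    .sort_values(ascending=False)  # largest first
    .head(3)                       # keep the top 3
    .reset_index()                 # back to a regular dataframe
)
print(top_families)
```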

Then I put the data in a pie chart, specifying the y axis of 'Native Speakers' and a legend, which creates colour coded labels for each language family shown in the chart.
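
The pie chart step might be sketched like this. The dataframe is a placeholder with made-up totals, and setting the family name as the index is one possible way to get the labels onto the chart, not necessarily the approach used in the notebook.

```python
import pandas as pd

# Placeholder totals for the top 3 families; not the real figures
top_families = pd.DataFrame(
    {
        "Language Family": ["Indo-European", "Sino-Tibetan", "Afroasiatic"],
        "Native Speakers": [70, 55, 20],
    }
)

# The index supplies the wedge labels; legend=True adds the colour key
ax = top_families.set_index("Language Family").plot.pie(
    y="Native Speakers", legend=True
)
```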

The code and responses for the rest of the questions can be found here. I used markdown in the notebook to write the questions and their answers.

Next Time:

For my next iteration of a web scraping & data analysis project, I'd like to make things more complicated with:

- Web scraping a dynamic page where more data is revealed on click/ scroll

- Analysing a much bigger dataset, potentially one that needs some data cleaning work before analysis

Final thoughts

Even though it was a quick one, I enjoyed doing this project. It reminded me how useful short, manageable projects can be for getting the practice reps in. Plus, extracting data from the internet and creating charts from it, even with a small dataset, is fun.
