Not sure what the robots.txt file is? Need to make changes to it but don't know how to access it?

The robots.txt file is crucial for any website because it helps control the behavior of search engines and other web robots. This plain-text file acts as a set of instructions that tells search engines and other robots which pages or sections of your website they may crawl.

In this article, we will discuss how to find and edit the robots.txt file in WordPress. Whether you want to block specific pages, allow search engines to crawl your entire site, or disable crawling of specific file types, knowing how to edit your robots.txt file is an important step in controlling your website's presence on the internet.
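To make those scenarios concrete, here is a minimal Python sketch that parses a small robots.txt and asks whether a given path may be crawled. The rules and paths (`/wp-admin/`, `/private-page/`) are hypothetical examples, not taken from a real site, and the helper name `is_allowed` is our own. The standard-library parser used here is assumed to match plain path prefixes only, so wildcard rules for file types are left out.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only; adjust the paths to your own site.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /private-page/
"""


def is_allowed(robots_text: str, path: str, agent: str = "*") -> bool:
    """Return True if `agent` may crawl `path` under the given robots.txt text."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines, so no network request is made.
    parser.parse(robots_text.splitlines())
    return parser.can_fetch(agent, path)


if __name__ == "__main__":
    for path in ("/blog/hello-world/", "/private-page/", "/wp-admin/options.php"):
        verdict = "allowed" if is_allowed(SAMPLE_ROBOTS_TXT, path) else "blocked"
        print(f"{path}: {verdict}")
```

A path that matches no `Disallow` rule is treated as crawlable, which is why an empty `Disallow:` list leaves the whole site open to crawlers.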

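By following these steps, you can quickly and easily edit the robots.txt file on your WordPress site, improve your site’s SEO, and keep well-behaved crawlers out of sections you don't want crawled. Keep in mind that robots.txt is publicly readable, so it is not a way to hide sensitive information on its own.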

Find and edit the robots.txt file

To make changes to your website’s robots.txt file, you first need to access it.

In this section, we’ll walk you through the steps to access the robots.txt file in WordPress so you can make changes to it.

Install WP File Manager

To find the robots.txt file, you will need to access your WordPress website’s file manager.

You can access your site’s files via FTP, but it’s easier to install the WP File Manager plugin. The plugin will display your website’s files for quick editing directly from the WordPress dashboard.

Navigate to the Plugins section in the side menu and click the Add New button to open the plugin directory. Search for WP File Manager in the search bar. Click Install Now and wait for the plugin to be installed, then click the Activate button to enable it on your website.

You will now see the WP File Manager on the left sidebar of your WP dashboard.

Find the robots.txt file

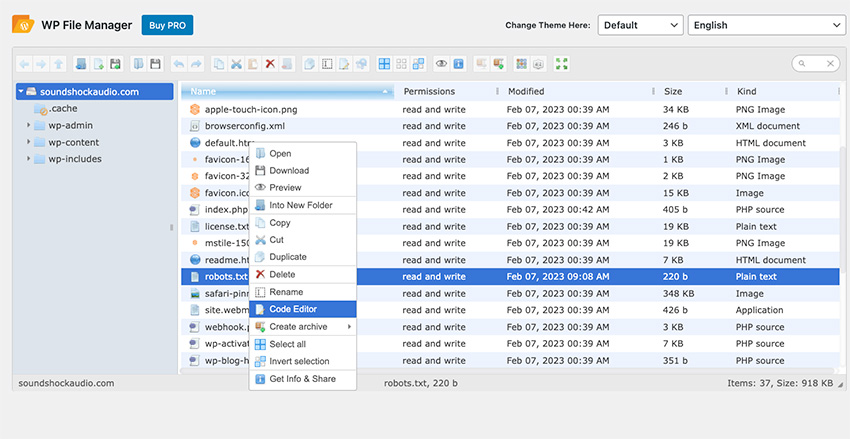

After opening the file manager in your WordPress control panel, the next step is to locate the robots.txt file. The robots.txt file is typically located in the root directory of your WordPress website and can usually be found by browsing the directory structure in your file manager. To find the file, look for the file named robots.txt and select it.

If you can't find the robots.txt file, you can also use the search function in your file manager to quickly find it. Enter "robots.txt" in the search bar and press Enter. This should display the file in search results, and you can then select it to open it in a file editor.
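If you have access to the site's files from a script rather than the dashboard, the same lookup can be sketched in Python. This is only an illustration: the function name `find_robots_txt` and the example path are hypothetical, and it assumes you can read the WordPress root directory directly.

```python
from pathlib import Path
from typing import Optional


def find_robots_txt(site_root: Path) -> Optional[Path]:
    """Return the path to robots.txt under `site_root`, or None if absent."""
    # The file normally sits directly in the site's root directory.
    candidate = site_root / "robots.txt"
    if candidate.is_file():
        return candidate
    # Fall back to a recursive search, like the file manager's search bar.
    matches = sorted(p for p in site_root.rglob("robots.txt") if p.is_file())
    return matches[0] if matches else None


if __name__ == "__main__":
    # Hypothetical path; replace it with your own WordPress root directory.
    print(find_robots_txt(Path("/var/www/html")))
```

Checking the root first mirrors the manual steps above: browse the root directory, then fall back to search only if the file is not there.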

Edit the robots.txt file

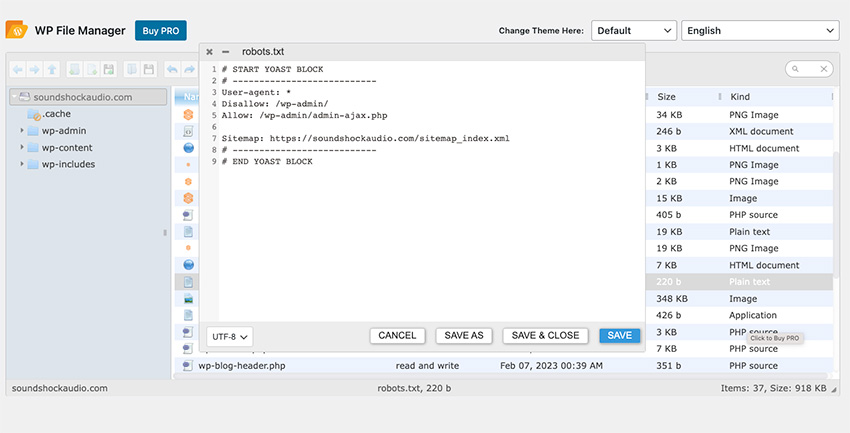

After finding the robots.txt file, right-click the file and a list of options will appear. Select the Code Editor option. A code editor will now appear where you can make the necessary changes.

After making changes to the file, click the Save and Close button. Your changes will now be saved.

It is important to check and update the robots.txt file regularly to ensure it reflects the current status of your site and provides necessary instructions to search engine robots.
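One way to make that regular check less error-prone is a small lint pass over the file's text after each edit. The sketch below is a rough illustration, not an official validator: the set of directive names is our own assumption about what a typical robots.txt contains, and `lint_robots_txt` is a hypothetical helper.

```python
from typing import List

# Assumed set of commonly used directives; extend it if your file uses others.
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots_txt(text: str) -> List[str]:
    """Return a list of human-readable problems found in robots.txt text."""
    problems = []
    for number, raw in enumerate(text.splitlines(), start=1):
        # Drop trailing comments and surrounding whitespace.
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        name, separator, _value = line.partition(":")
        if not separator:
            problems.append(f"line {number}: missing ':' separator")
        elif name.strip().lower() not in KNOWN_DIRECTIVES:
            problems.append(f"line {number}: unknown directive '{name.strip()}'")
    return problems


if __name__ == "__main__":
    edited = "User-agent: *\nDisalow: /wp-admin/\n"
    for problem in lint_robots_txt(edited):
        print(problem)
```

A check like this catches simple typos, such as a misspelled `Disallow`, which crawlers would otherwise silently ignore.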

Quickly edit your website’s robots.txt file

By following the steps outlined in this article, you can quickly and easily access, find, and change the robots.txt file on your WordPress site.

Whether you want to keep search engines away from specific pages, allow them to crawl your entire site, or disable crawling of specific file types, knowing how to edit the robots.txt file is an important step in controlling your website's presence on the internet.

The above is the detailed content of Efficiently modify Robots.txt files in WordPress. For more information, please follow other related articles on the PHP Chinese website!
