Is Hallucination in Large Language Models (LLMs) Inevitable?

Apr 15, 2025, 11:31 AM

Large Language Models (LLMs) and the Inevitable Problem of Hallucinations

You've likely used AI models like ChatGPT, Claude, and Gemini. These are all examples of Large Language Models (LLMs), powerful AI systems trained on massive text datasets to understand and generate human-like text. However, even the most advanced LLMs suffer from a significant flaw: hallucinations.

Recent research, notably "Hallucination is Inevitable: An Innate Limitation of Large Language Models," argues that these hallucinations – the confident presentation of fabricated information – are an inherent limitation, not a mere bug. This article explores this research and its implications.

Understanding LLMs and Hallucinations

LLMs, while impressive, struggle with "hallucinations," generating plausible-sounding but factually incorrect information. This raises serious concerns about their reliability and ethical implications. The research paper categorizes hallucinations as either intrinsic (contradicting input) or extrinsic (unverifiable by input). Causes are multifaceted, stemming from data quality issues (bias, misinformation, outdated information), training flaws (architectural limitations, exposure bias), and inference problems (sampling randomness).

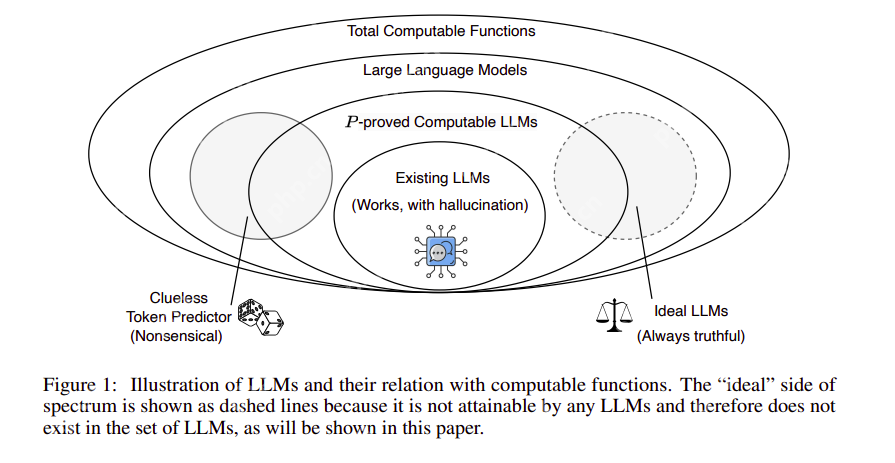

The Inevitability of Hallucination

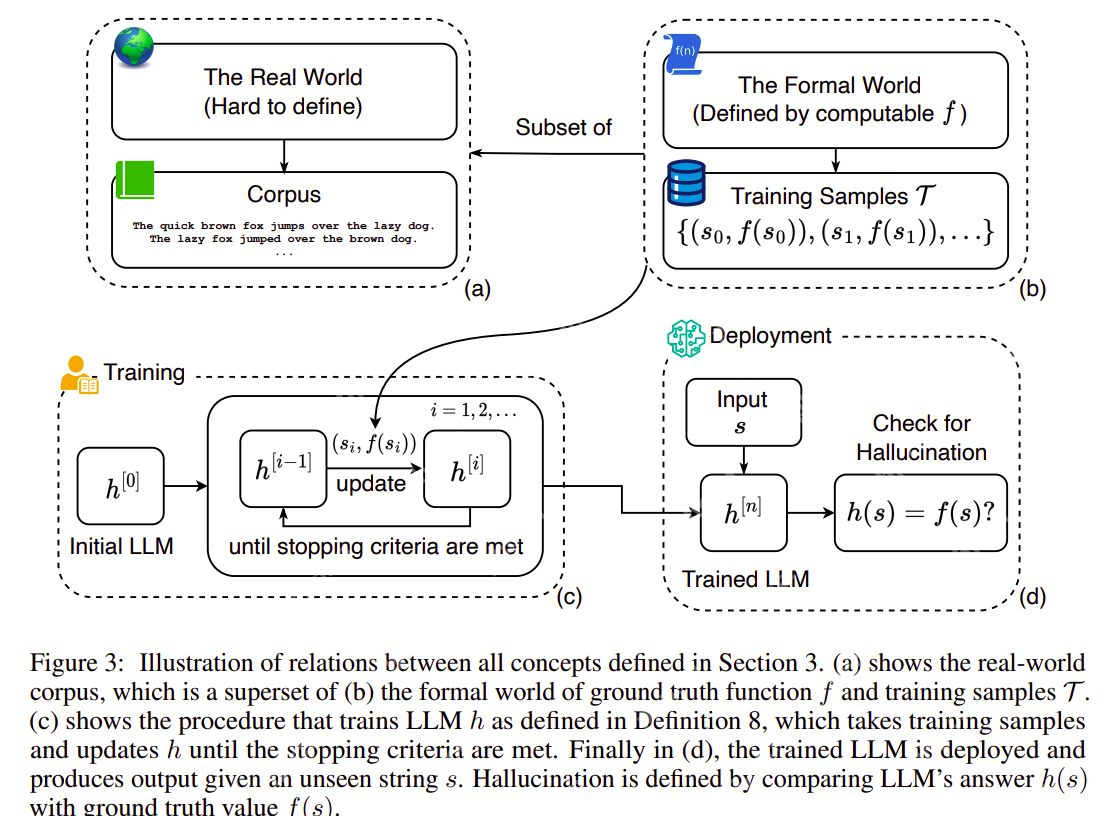

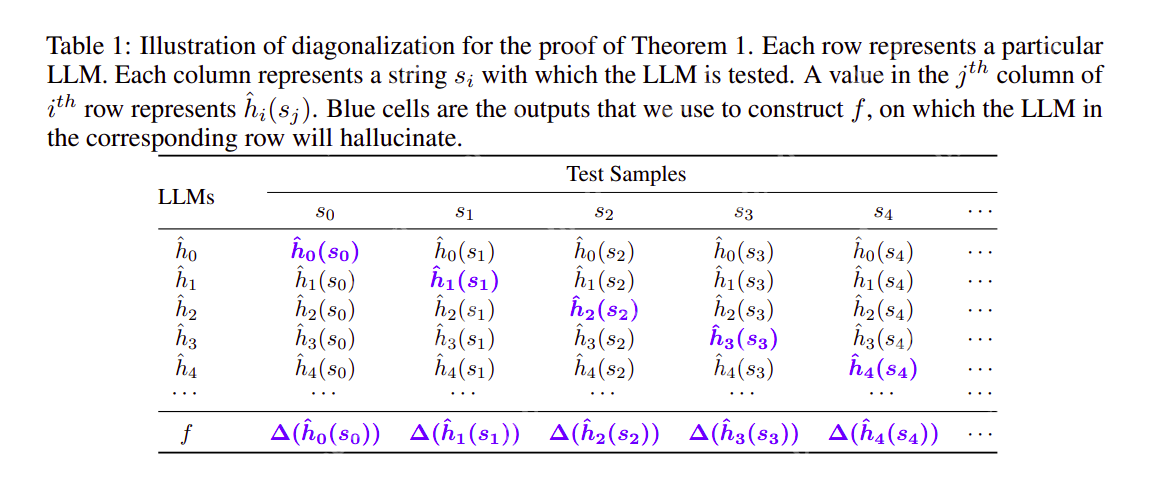

The core argument of the research is that hallucination is unavoidable in any computable LLM. The paper supports this with mathematical proofs (Theorems 1, 2, and 3): even with perfect training data and an optimal architecture, a computable LLM cannot learn every computable function, so it will inevitably produce incorrect outputs on some inputs. This holds true even for LLMs restricted to polynomial-time computation. Increasing model size or training data does not remove this fundamental limitation.
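The flavor of this kind of impossibility argument can be illustrated with a toy diagonalization. The sketch below is not the paper's proof; it only assumes that "models" are plain functions from an input index to an answer string, and the names `diagonal_ground_truth` and `models` are hypothetical. Given any fixed list of such models, it builds a ground-truth function that each model answers incorrectly on at least one input.

```python
from typing import Callable

# Hypothetical stand-in for an LLM: maps an input index to an answer string.
Model = Callable[[int], str]


def diagonal_ground_truth(models: list[Model]) -> Callable[[int], str]:
    """Build a ground-truth function that model i gets wrong on input i."""

    def truth(i: int) -> str:
        if i < len(models):
            # Appending a marker guarantees the answer differs from model i's.
            return models[i](i) + "'"
        return "0"  # arbitrary value outside the diagonal

    return truth


# Three toy "models" (assumptions for illustration only).
models: list[Model] = [
    lambda i: "yes",
    lambda i: str(i),
    lambda i: "a" * i,
]

truth = diagonal_ground_truth(models)

# Each model disagrees with the constructed ground truth on its own index.
mistakes = [models[i](i) != truth(i) for i in range(len(models))]
print(mistakes)  # [True, True, True]
```

Adding more models to the list does not help: the construction simply extends the diagonal, which mirrors the article's point that scale alone does not remove the limitation.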

Empirical Evidence and Mitigation Strategies

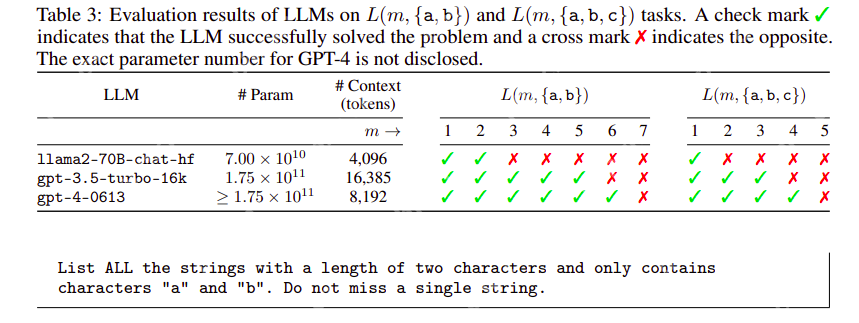

The research backs its theoretical claims with empirical evidence. In experiments, Llama 2 and GPT models failed to complete simple string enumeration tasks, which is consistent with the claim that hallucinations are inevitable.
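To make the task concrete, here is a minimal sketch of what a string enumeration task and a checker for it might look like. The exact prompt format and alphabets used in the paper are not given in this article, so the alphabet `"ab"`, the length `3`, and the helper names `enumerate_strings` and `is_complete_enumeration` are assumptions for illustration.

```python
from itertools import product


def enumerate_strings(alphabet: str, length: int) -> list[str]:
    """Return every string of the given length over the alphabet."""
    return ["".join(chars) for chars in product(alphabet, repeat=length)]


def is_complete_enumeration(response: list[str], alphabet: str, length: int) -> bool:
    """Check that a response lists every expected string exactly once."""
    expected = enumerate_strings(alphabet, length)
    return len(response) == len(expected) and set(response) == set(expected)


strings = enumerate_strings("ab", 3)
print(strings)       # ['aaa', 'aab', 'aba', 'abb', 'baa', 'bab', 'bba', 'bbb']
print(len(strings))  # 8, i.e. 2 ** 3

# A hypothetical model response that skips one string fails the check.
print(is_complete_enumeration(strings[:-1], "ab", 3))  # False
```

The number of strings grows as `len(alphabet) ** length`, so the required output becomes long very quickly even though the rule for producing it stays trivial.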

While complete eradication is impossible, the paper explores mitigation strategies:

- Larger Models & More Data: While helpful, this approach has inherent limits.

- Improved Prompting: Techniques like Chain-of-Thought can improve accuracy but don't solve the core problem.

- Ensemble Methods: Combining multiple LLMs can reduce errors but doesn't eliminate them.

- Safety Constraints ("Guardrails"): These can mitigate harmful outputs but don't address the fundamental issue of factual inaccuracy.

- Knowledge Integration: Incorporating external knowledge sources can improve accuracy in specific domains.
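As an illustration of the ensemble idea from the list above, the following is a minimal majority-vote sketch. It assumes each model is a plain function from a prompt to an answer string; the stub models and the `majority_vote` helper are hypothetical and do not come from the paper.

```python
from collections import Counter
from typing import Callable

# Hypothetical stand-in for an LLM call: prompt in, answer out.
Model = Callable[[str], str]


def majority_vote(models: list[Model], prompt: str) -> tuple[str, float]:
    """Return the most common answer and the fraction of models that gave it."""
    answers = [model(prompt) for model in models]
    answer, count = Counter(answers).most_common(1)[0]
    return answer, count / len(answers)


# Stub models for illustration: two agree, one disagrees.
stub_models: list[Model] = [
    lambda prompt: "Paris",
    lambda prompt: "Paris",
    lambda prompt: "Lyon",
]

answer, agreement = majority_vote(stub_models, "What is the capital of France?")
print(answer)  # Paris

# If most models share the same mistake, the vote returns that mistake.
wrong_models: list[Model] = [lambda prompt: "Lyon"] * 3
print(majority_vote(wrong_models, "What is the capital of France?")[0])  # Lyon
```

The second call shows why voting reduces errors without eliminating them: agreement among models measures consistency, not correctness.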

Conclusion: Responsible AI Development

The research concludes that hallucinations are an inherent characteristic of LLMs. While mitigation strategies can reduce their frequency and impact, they cannot eliminate them entirely. This necessitates a shift towards responsible AI development, focusing on:

- Transparency: Acknowledging the limitations of LLMs.

- Safety Measures: Implementing robust safeguards to minimize the risks of hallucinations.

- Human Oversight: Maintaining human control and verification of LLM outputs, especially in critical applications.

- Continued Research: Exploring new approaches to reduce hallucinations and improve the reliability of LLMs.

The future of LLMs requires a pragmatic approach, acknowledging their limitations and focusing on responsible development and deployment. The inevitability of hallucinations underscores the need for ongoing research and a critical evaluation of their applications. This isn't a call to abandon LLMs, but a call for responsible innovation.

